AI tools, not search engines, are becoming the first place people go for financial advice and recommendations.

But while most brands are still focused on ranking in Google, the answers showing up in ChatGPT, Gemini, Claude, and Perplexity are coming from somewhere else entirely.

So, who is AI citing when it comes to consumer banking? And why does it matter? Don’t worry, Goodie has all the answers you’re looking for. Let’s get started with why this study matters.

When people look to AI for answers about banking products, payment platforms, or how to move their money, the models aren’t pulling insights from just anywhere. They’re citing the sources they trust most, the crème de la crème of the internet.

We wanted to pinpoint exactly which websites dominate that trust. Which domains consistently surface in AI-generated answers, specifically in consumer banking? And who’s capturing the lion’s share of visibility in the finance space right now?

Answering that means going deeper than looking at plain rankings and brand mentions. We need to see exactly where the citations are coming from and how often. That’s where our methodology comes in.

Between February and June 2025, we isolated over 109,000 citations (out of 5.7 million, mind you) tied to prompts about banking, payments, checkout, and money transfers.

We deduplicated links down to their root domains (shoutout PCMag, who was really trying to show up), tagged each site by content type, and calculated two key metrics:

But it’s not just about understanding which types of content and websites GenAI tends to favor; it’s also about helping brands answer a question marketers are getting asked more and more:

“Where do we need to get mentioned to show up in AI search?”

This data gives us a starting point. If AI tools are referencing specific domains when answering finance-related questions, brands need to understand who those sources are and how to earn a spot in the mix.
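For readers who want to try something similar on their own citation logs, here is a minimal Python sketch of the steps described above: collapsing links to root domains, then counting how often each domain is cited and by how many models. It is not the pipeline used in this study. The `(model, url)` input shape, the function names, and the sample rows are all assumptions made for illustration, and the root-domain logic is deliberately naive.

```python
from collections import Counter, defaultdict
from urllib.parse import urlparse


def root_domain(url: str) -> str:
    """Naive root-domain extraction: keep the last two labels of the hostname.

    Assumption: this ignores multi-part suffixes such as .co.uk. A production
    pipeline would more likely consult the Public Suffix List.
    """
    host = (urlparse(url).hostname or "").lower()
    labels = host.split(".")
    return ".".join(labels[-2:]) if len(labels) >= 2 else host


def summarize(citations):
    """Count citations per root domain and the distinct models citing it.

    `citations` is an iterable of (model_name, cited_url) pairs
    (a hypothetical input shape, not the study's actual schema).
    """
    frequency = Counter()
    models_by_domain = defaultdict(set)
    for model, url in citations:
        domain = root_domain(url)
        if not domain:
            continue  # skip rows that do not parse as a URL with a hostname
        frequency[domain] += 1
        models_by_domain[domain].add(model)
    # Rank by citation count, then by number of distinct models.
    ranked = sorted(
        frequency,
        key=lambda d: (frequency[d], len(models_by_domain[d])),
        reverse=True,
    )
    return [(d, frequency[d], len(models_by_domain[d])) for d in ranked]


# Made-up rows for illustration only; these are not study data.
sample = [
    ("ChatGPT", "https://www.nerdwallet.com/a"),
    ("Gemini", "https://nerdwallet.com/b"),
    ("ChatGPT", "https://uk.pcmag.com/x"),
]
for domain, count, model_count in summarize(sample):
    print(domain, count, model_count)
```

Collapsing to the root domain is what keeps a site with many subdomains or article URLs from being counted as many separate sources.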

But crunching the numbers was just step one. The real story is in who comes out on top. If AI is repeatedly pulling from the same small set of domains to answer finance-related questions, that’s your roadmap for where to earn mentions of your own.

So, who’s winning AI visibility in consumer banking right now? Let’s take a look.

These are the top 10 domains that showed up most often in AI answers to banking, payment, and money prompts, ranked by citation frequency and by how many models cited them:

You can’t show up in AI answers if models aren’t citing you. The top-cited domains aren’t just user-friendly; they’re embedded in the content that AI leans on to answer users’ questions. For finance brands, visibility depends less on what you publish and more on where and how often you’re mentioned.

Not all models think alike.

Even when answering the same kinds of questions like “best payment platforms”, “how to transfer money online”, or “which banks are safest”, different tools pull from very different sources.

Some over-index on news organizations. Others lean into product reviews or crowd wisdom. Understanding these nuances matters if you’re trying to show up not just in one LLM or in one place, but across all LLMs, for multiple queries (hint, hint: you are).

Before we dive into each model, let’s think big picture: some domains are power players across all four LLMs, others only dominate one. To make this easier to see, here’s a map of the domains by two key factors:

Here’s our quick take: Across all four LLMs, a handful of “Power Players” like NerdWallet, CNBC, Forbes, and Investopedia dominate both volume and consistency. Others, like PCMag and Bankrate, win in specific models but not everywhere, while places like Wikipedia and Reddit punch above their weight for niche or community-driven queries.
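The volume-versus-consistency split described above can be expressed as a simple rule. The Python sketch below is only an illustration: the threshold of 1,000 citations is an arbitrary placeholder, and the “model specialist” and “niche” labels are our shorthand here, not categories or cutoffs from the study.

```python
def classify(total_citations: int, models_citing: int,
             volume_threshold: int = 1000, total_models: int = 4) -> str:
    """Bucket a domain by citation volume and cross-model consistency.

    Assumptions: `volume_threshold` is a hypothetical cutoff, and the
    bucket names other than "power player" are illustrative labels.
    """
    high_volume = total_citations >= volume_threshold
    if high_volume and models_citing == total_models:
        return "power player"      # high volume, cited by every model
    if high_volume:
        return "model specialist"  # high volume, but not in every model
    return "niche"                 # lower volume overall


# Hypothetical inputs for illustration only; not study data.
print(classify(5000, 4))
print(classify(5000, 1))
print(classify(50, 2))
```

In practice the cutoff would be tuned to your own dataset, for example by using a percentile of citation counts rather than a fixed number.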

Each of the four models we analyzed, ChatGPT, Gemini, Claude, and Perplexity, has its own fingerprint when it comes to which domains it trusts most in the finance space.

Here’s a snapshot of what was surfaced:

First, some general findings:

This is more than an academic breakdown, people. It’s a map for building your strategy.

If you want consistent AI visibility, it’s not enough to be cited in just one model. Brands need to understand how each model evaluates trust, and diversify where (and how) they earn mentions accordingly.

Otherwise? You might be killing it in ChatGPT, but be completely invisible in Perplexity, or vice versa.

Next: We break down the content formats that LLMs cite most often and why those patterns matter for your brand’s placement.

When the leading AI models generate answers about money, they’re not pulling at random. They lean on patterns. Certain types of content get cited again and again across models because they align with how LLMs evaluate relevance, trust, and usability.

Here’s what we saw most often, and why each format wins:

Affiliate and review content: Structured comparisons, clear product details, and commercial intent signals (“best,” “cheapest,” “alternatives”). Frequently updated and optimized for crawlability.

News publishers: Timely reporting, high editorial standards, and market/regulation coverage that adds credibility.

Reference content: Clean definitions, glossary-style explainers, and interconnected topic coverage for explanatory queries.

User-generated content (UGC): Real-world experiences, community opinions, and edge cases that more official sources often miss.

The takeaway? If your content doesn’t map to one of these formats, you’re at a disadvantage. Which brings us to the next question: how can brands adapt to earn a spot in this mix?

If you're trying to increase your AI visibility, you gotta think outside of the box in which your content lives, and start thinking about where your brand is actually getting mentioned.

A few key takeaways:

And here’s the catch: not all models value the same sources. What works in ChatGPT might flop in Perplexity. That’s why we broke down how each model is playing by its own rules.

While some domains like NerdWallet (we’re not sponsored, it’s the data, we swear!) show up across the board, each GenAI model still has its own preferences, and those differences matter.

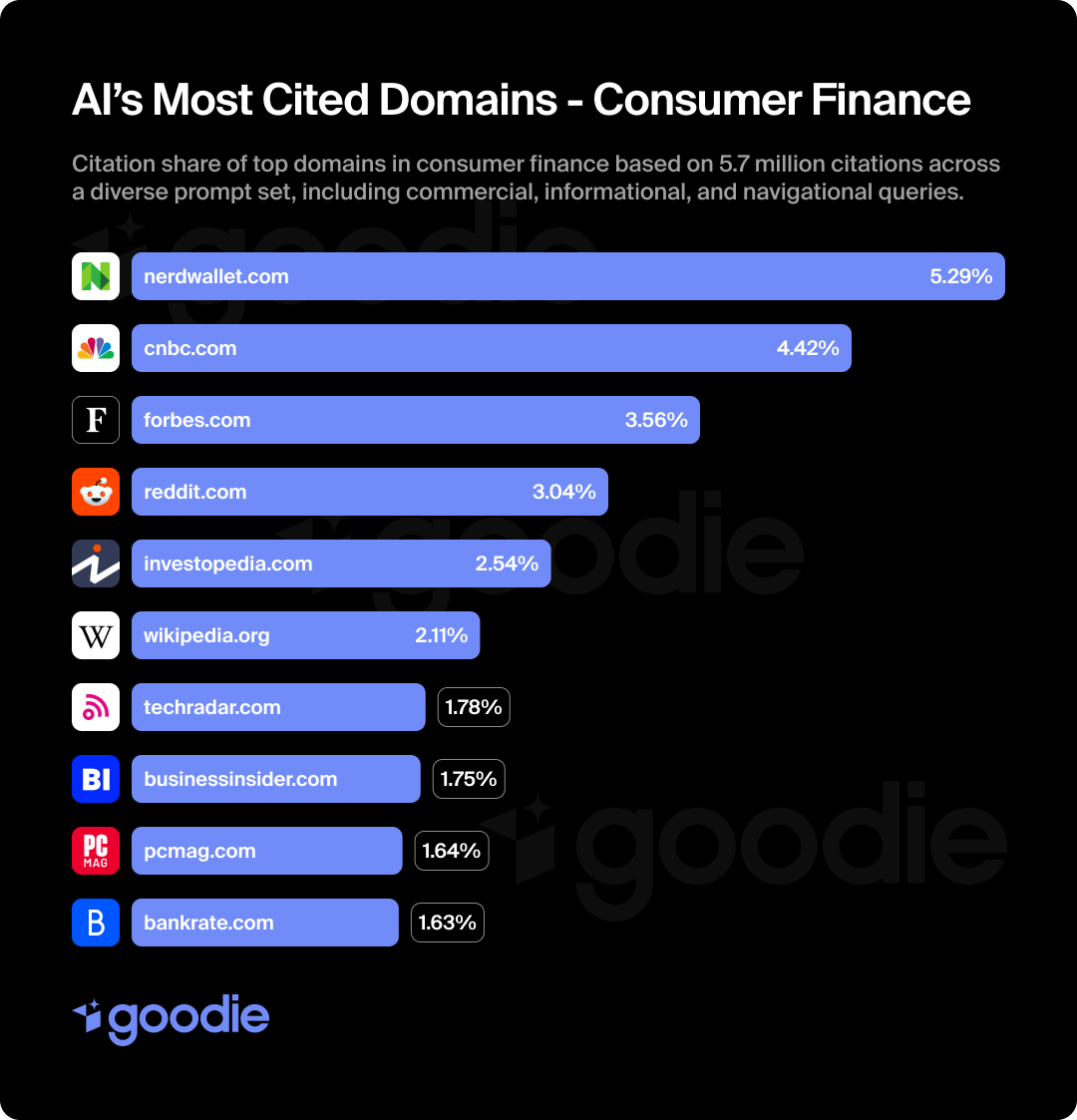

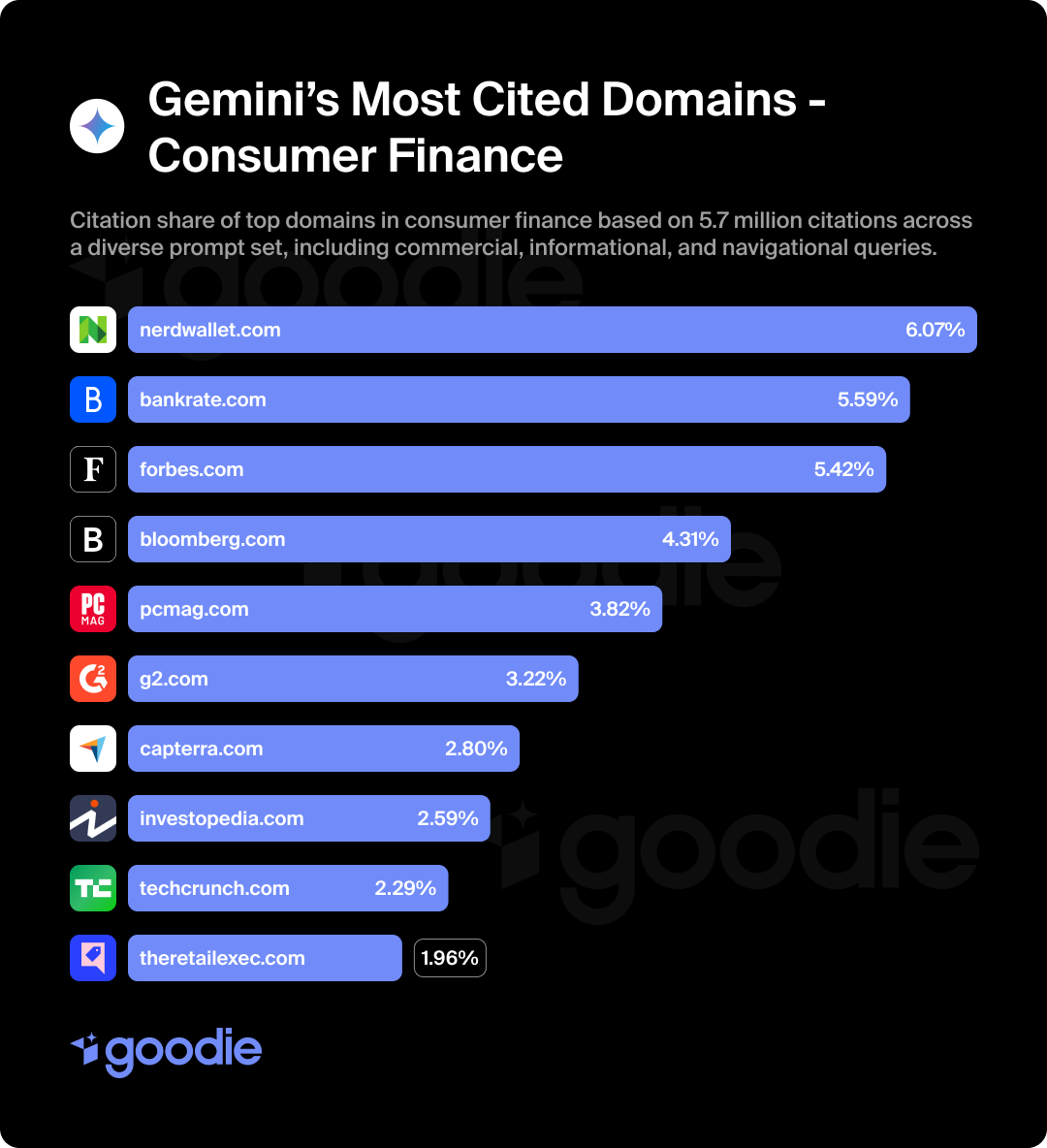

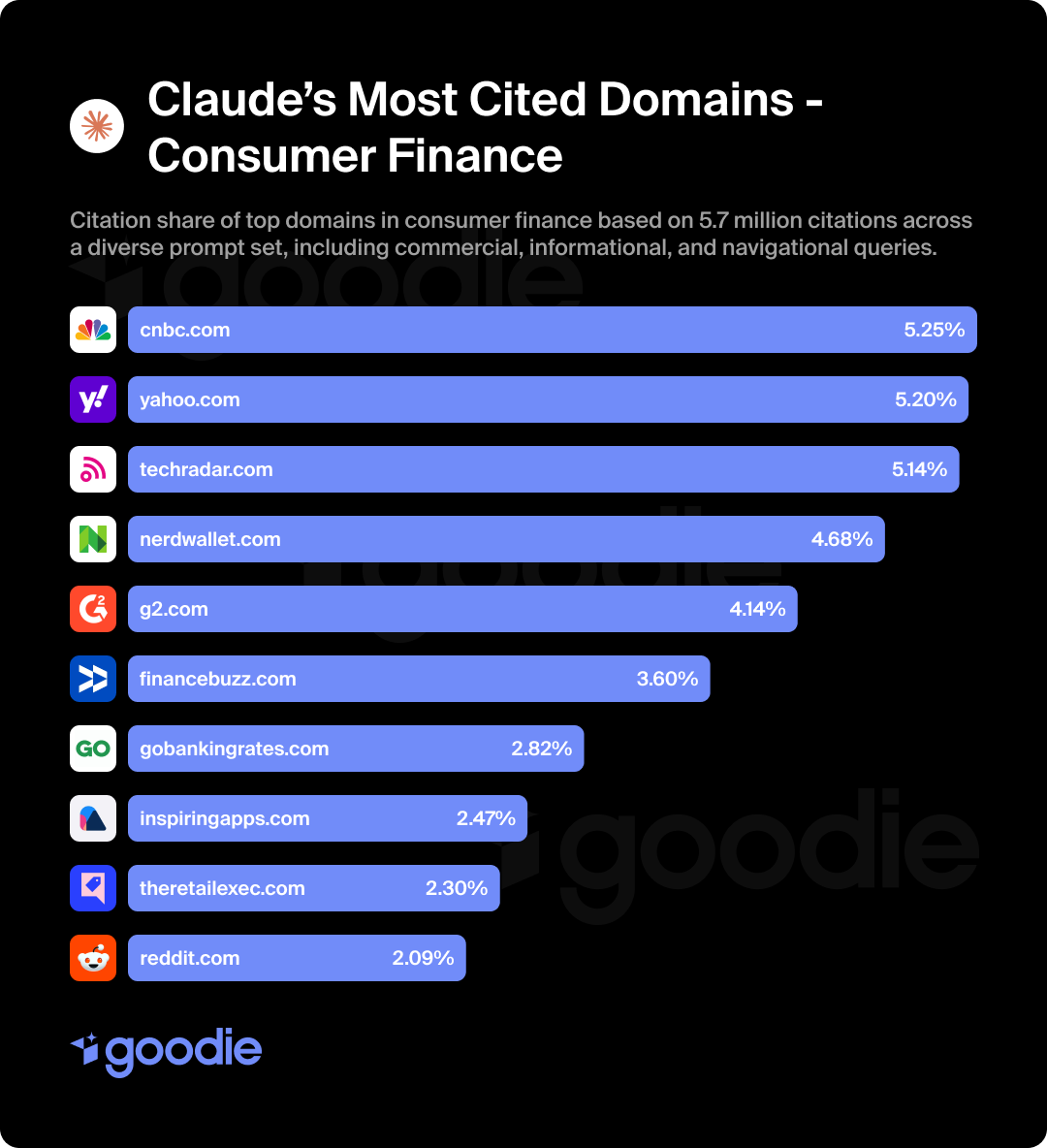

If you’re only monitoring performance on one platform, you might have some major blind spots. Here are the top 10 most-cited domains per model (not overall), based on our analysis of finance-related prompts.

Takeaway: ChatGPT favors traditional publishers, high-quality references, and sites with structured financial explainers. Strong editorial credibility wins here.

Takeaway: Gemini leans into affiliate and review-heavy content, especially in commercially relevant queries. Less emphasis on traditional publishers, more on product-focused utility.

Takeaway: Claude strongly leans toward affiliate-style product content, but still respects high-authority news organizations. Reddit also makes an appearance for more personal query styles.

Takeaway: Perplexity leans harder into UGC and affiliate content than any other model. Less institutional, more practical, and people-first.

This is tactical information. Different models surface different sources depending on what kind of content they prioritize. Think of it like this: even if you’re swimming in visibility in ChatGPT, you might not be reaching your full audience, especially if they’re mostly searching on Perplexity.

Diversified AI visibility = diversified content placement.

And this doesn’t mean publishing on your own website more often or creating articles 3x their original length. You need to show up in the right ecosystems, with the right kind of mentions.

You won’t become a cited source by doing what you’ve always done.

The brands showing up aren’t just publishing content. They’re producing structured, high-signal material that gets referenced by the rest of the internet. If you want that prime source spot in AI results, it’s less about dominating your own domain and more about being citation-worthy across the board.

The bottom line: You don’t need to be the biggest brand. But you do need to be useful in the right places. Visibility in AI search doesn’t come from being the loudest; it comes from being cited the most.

If you’ve been following along, you know this already: no major banks made the top 10.

Not in ChatGPT. Not in Gemini. Not in Perplexity. Not in Claude. Across all four models, we didn’t see a single traditional bank or neobank platform show up with any meaningful influence. Not even the ones with massive content teams or aggressive paid strategies.

This is a wake-up call.

The winning sources aren’t the biggest brands, they’re just the most citable. Here’s what they have in common:

Owning the product doesn’t mean you earn visibility in AI. You earn it by being cited in the content AI trusts to answer questions about that product.

The blog that you post on 3x per week simply isn’t enough. AI models rarely cite brand pages and instead pull from third-party content. Owning Google rankings doesn’t guarantee AI visibility, either, because the rules are different. If models cite NerdWallet for “best checking account for teens” and you’re not in that piece, then you’re basically invisible.

What once were nice-to-haves (affiliate placements, PR coverage, roundups, and product reviews) are now core to AI visibility. If you want to show up, your brand must live inside the answers. This means:

We built this study to understand who’s showing up in AI answers and, more importantly, why.

Now we help brands take action on it.

Goodie tracks how often your domain (and your competitors’ domains) is being cited across tools like ChatGPT, Gemini, Claude, Perplexity, and more. But we don’t just hand over this data and leave you to figure out the next steps on your own. We turn it into a strategy for your business.

✅ Domain Influence Tracking: See where your brand is (or isn’t) getting mentioned in AI responses, by model, by category, and over time.

✅ Competitor Benchmarking: Understand how your citation footprint stacks up against direct competitors and content category leaders.

✅ Visibility Gap Analysis: Find out where your brand is missing from the conversation, and which publishers or content types are driving visibility in your space.

✅ Strategic Placement Plans: Prioritize the content ecosystems that matter most (affiliate, publisher, reference, or UGC) based on real AI model behavior.

If you’re invisible in AI answers, you’ve got a citation problem on your hands.

We help finance brands:

AI is doing more than just changing how people search; it’s changing how trust itself is distributed.

The most visible brands in AI aren’t necessarily the biggest. They’re the ones being mentioned, cited, and embedded in the content that models rely on to answer real user questions. That means visibility isn’t about who shouts the loudest but about who gets referenced when it counts.

In the world of AI answers, not being cited means you may as well not exist.

We built Goodie to change that.

Because citations are the foundation of visibility in AI answers. Tools like ChatGPT, Gemini, and Perplexity don’t “rank” content the way Google does; they reference sources they trust. If your brand isn’t being cited, it’s not part of the answer.

Not anymore. GenAI models rarely cite brand.com blogs, especially when more neutral or established third-party sources are available. To show up, you need to be mentioned in the content that AI actually pulls from: affiliates, publishers, product roundups, explainers, and reference-style articles.

You can (and should) optimize your content structure, but that’s just one part of it. GenAI visibility is also shaped by what others say about you. Earning citations through third-party content, affiliate mentions, and PR is now just as important as your on-site SEO.

SEO is about rankings. AEO (Answer Engine Optimization) is about inclusion. You don’t need to be in the top three blue links; you need to be in the source list that AI models pull from when constructing an answer. Different mechanics, different strategy.

That’s exactly what our Domain Influence Tracker measures. We show you how often your brand is cited in GenAI responses, how you compare to competitors, and where your gaps are, by model, content type, and topic.

It starts with understanding why. Are you absent from the publisher content GenAI pulls from? Do affiliates mention competitors but not you? Are you missing from reference-style explainers entirely?

Once we identify the gaps, we help you build a strategy to earn citations where they actually count, not just create more content and hope it lands.

Original study by Mostafa ElBermawy. Special thanks to: