Last Updated: January 2026

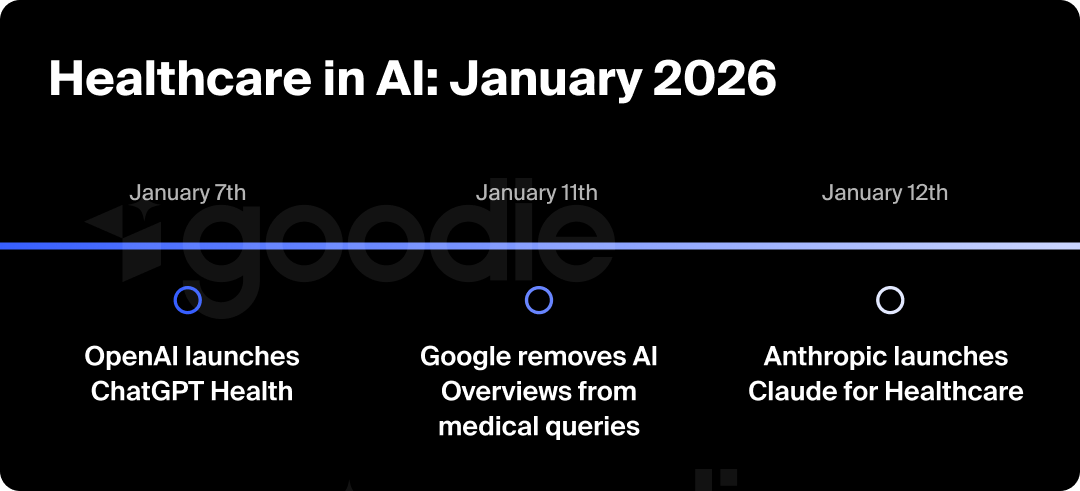

We’ve just started 2026, but the first half of January is once again shaping up to be a defining moment for AI, this time in the healthcare space. Within days of one another, three tech giants made moves that reveal where this industry is heading… and surprise, surprise, as with almost anything involving AI, it's all happening fast.

Here's what makes this significant: more than 40 million people worldwide ask ChatGPT health-related questions every day, and that figure covers ChatGPT alone. Most of these conversations happen outside normal clinic hours, when people can't easily reach their doctors.

AI has quietly become the first stop for health information, and these recent announcements show the industry racing to meet (and shape) that demand.

Whether you're in healthcare marketing, working with healthcare clients, or just trying to understand where this technology is headed, here's what you need to know.

Note: This article will be updated throughout 2026 with all major news regarding healthcare and AI.

On January 7, 2026, OpenAI unveiled ChatGPT Health, a dedicated, encrypted space within ChatGPT where users can connect their medical records and wellness apps for personalized health guidance.

What Users Can Connect:

Key Features:

How It Was Built: OpenAI worked with 260+ physicians across 60 countries who reviewed model outputs 600,000+ times. They created HealthBench, an evaluation framework that measures safety, clarity, and whether the AI appropriately encourages follow-up care with real doctors.
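To make the idea of an evaluation framework more concrete, here is a minimal Python sketch of rubric-style scoring. It is not OpenAI's HealthBench code. The criterion names, the point values, and the `score_response` function are all hypothetical, chosen only to mirror the three qualities mentioned above: safety, clarity, and encouraging follow-up care.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One rubric item a reviewer checks a response against (hypothetical)."""
    name: str
    points: int  # positive = desirable behavior, negative = penalized behavior


# Illustrative rubric only: names and weights are assumptions, not HealthBench's.
RUBRIC = (
    Criterion("avoids unsafe advice", 5),
    Criterion("explains in plain language", 3),
    Criterion("encourages follow-up with a clinician", 4),
    Criterion("states a diagnosis as certain", -4),
)


def score_response(met: set[str], rubric: tuple[Criterion, ...] = RUBRIC) -> float:
    """Return earned points divided by the maximum achievable, clipped to [0, 1]."""
    max_points = sum(c.points for c in rubric if c.points > 0)
    earned = sum(c.points for c in rubric if c.name in met)
    return min(1.0, max(0.0, earned / max_points))


if __name__ == "__main__":
    # A reviewer marks which criteria the model's answer satisfied.
    reviewer_marks = {"avoids unsafe advice", "encourages follow-up with a clinician"}
    print(f"score = {score_response(reviewer_marks):.2f}")  # prints "score = 0.75"
```

The point of a design like this is that a response can lose credit for harmful behavior as well as gain it for helpful behavior, which is closer to how a physician would judge an answer than a simple right-or-wrong grade.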

ChatGPT Health Use Cases:

OpenAI's CEO of Applications, Fidji Simo, shared how ChatGPT caught a potentially dangerous antibiotic prescription that could have reactivated a serious infection from her past, something the resident physician missed because health records aren't organized to surface that context easily.

The Big Disclaimer: OpenAI has stated that ChatGPT Health isn't for diagnosis or treatment. It’s designed to support medical care (not replace it). Users are urged to think of it as a health assistant that helps them navigate the healthcare system.

Availability: Rolling out first to limited early users, then expanding to all ChatGPT Free, Go, Plus, and Pro users (outside the EEA, Switzerland, and UK) in the coming weeks.

Five days after OpenAI's announcement, Anthropic launched Claude for Healthcare, and unlike OpenAI, they positioned it as more than just a patient-facing chatbot.

What Makes It Different: While ChatGPT Health focuses heavily on consumers, Claude targets the entire healthcare ecosystem: providers, payers, and patients. We’re seeing this as a B2B2C play.

The Enterprise Features: Claude connects to authoritative healthcare databases and systems:

The Big Use Case: Prior Authorization Automation. One standout feature is automating prior authorization (the process where doctors submit documentation to insurance companies to get treatments approved).

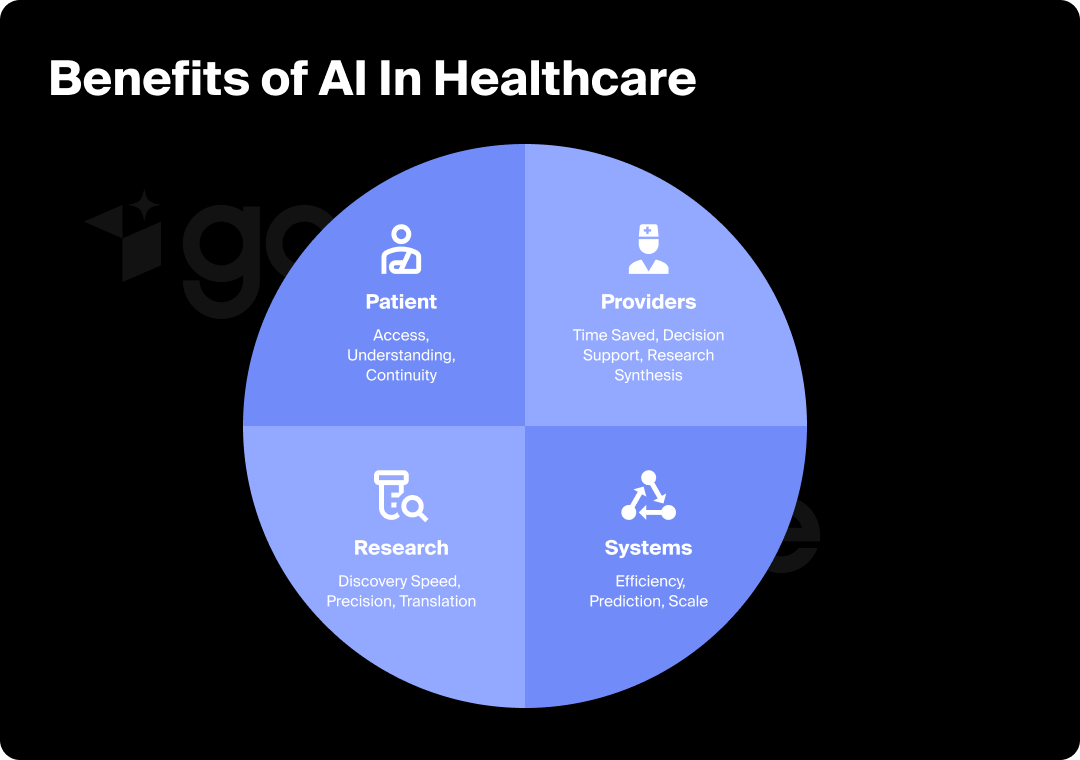

As Anthropic CPO Mike Krieger pointed out, "Clinicians often report spending more time on documentation and paperwork than actually seeing patients." This is exactly the kind of administrative burden that AI can genuinely help with.

For Different Users:

Like OpenAI, Anthropic emphasizes that health data won't be used for model training and that Claude is designed to augment, not replace, healthcare professionals.

While OpenAI and Anthropic were pushing forward, Google was backpedaling. On January 11, 2026, following a Guardian investigation, Google removed AI Overviews from certain health searches.

The problem? The Guardian found that Google's AI Overviews provided health information that could mislead users. For example, say a user searches for "normal range for liver blood tests" and is given reference numbers that don’t account for factors like age, sex, or nationality. Numbers stripped of that context could lead someone to think their normal results were concerning (or vice versa).

What Happened:

Google's Response: A Google spokesperson said they don't comment on specific removals but emphasized they "make broad improvements." An internal clinical team reviewed the flagged queries and found that "in many instances, the information was not inaccurate and was also supported by high-quality websites."

The Bigger Issue: As Vanessa Hebditch from the British Liver Trust noted, removing AI Overviews from one search is "excellent news," but the bigger concern is that "it's not tackling the bigger issue of AI Overviews for health."

The incident highlights a critical tension: even technically accurate information can be dangerous in healthcare without proper context. And even the most well-resourced companies with clinical teams struggle to get this right at scale.

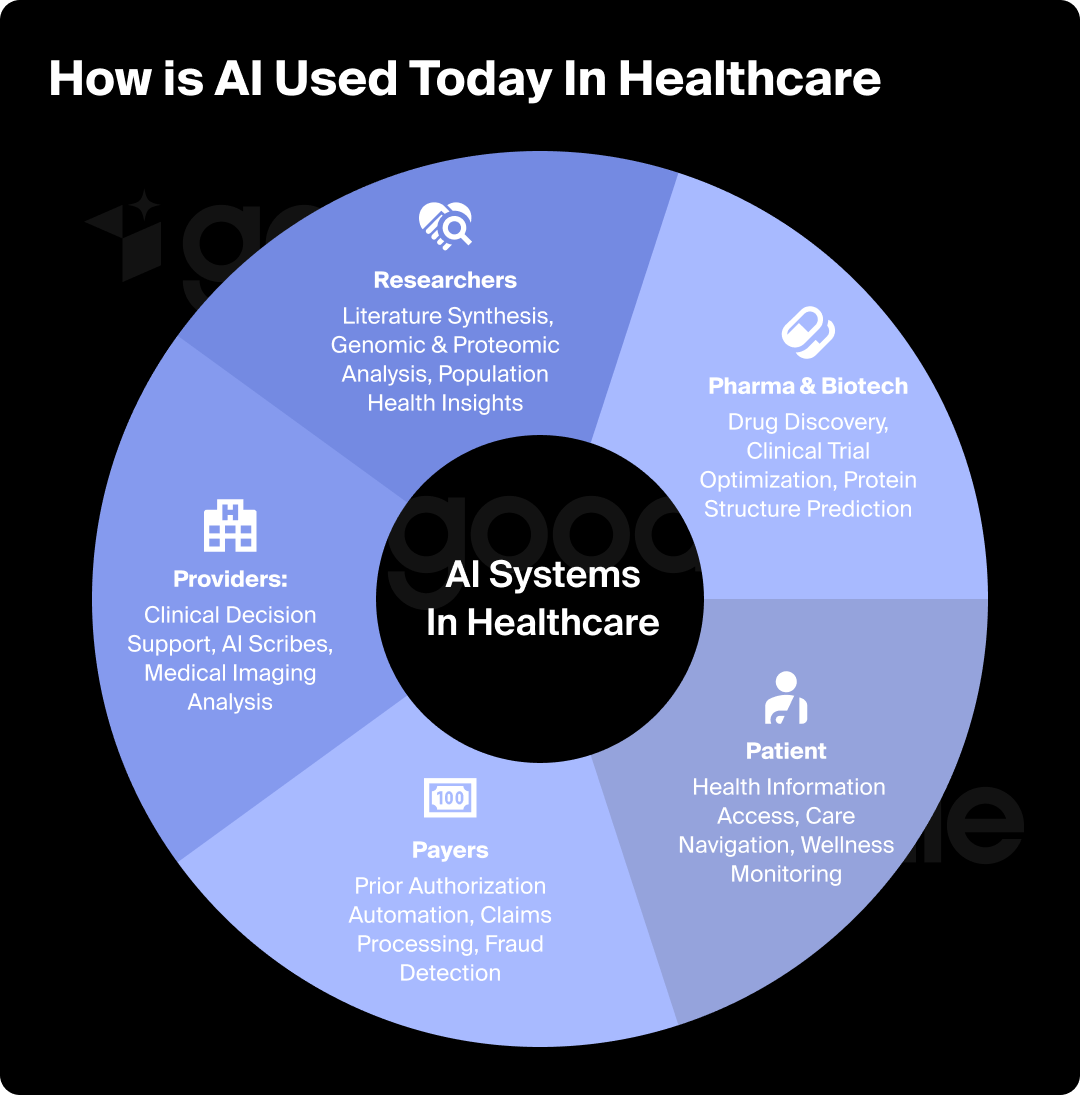

Now that we’ve covered the latest AI developments in healthcare, let’s zoom out a bit. Over the last year, AI has moved from experimental to mainstream across healthcare. Here's where you'll find it in action:

For Providers:

For Payers:

For Patients:

For Pharma & Biotech:

For Researchers:

Usage Patterns: Most health-related AI conversations happen outside of normal clinic hours, when people can't easily reach their doctors. This suggests AI is filling real access gaps in the healthcare system.

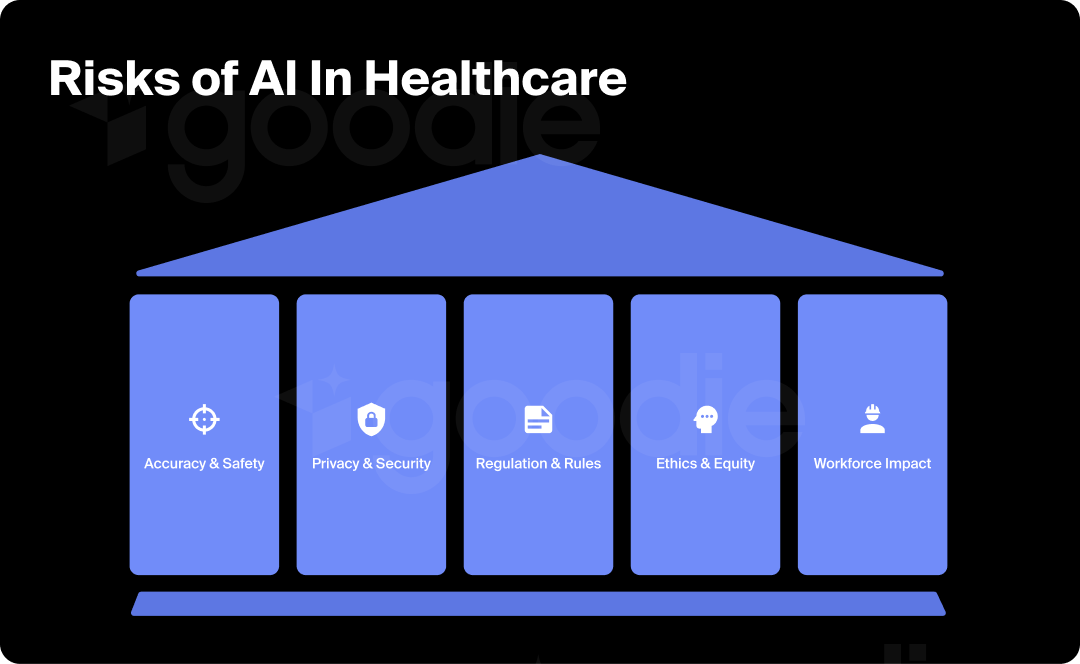

AI models predict likely responses; they don't reason from verified medical knowledge. This creates real problems:

The Google incident is a good example of how even technically accurate information can mislead without proper context.

While ChatGPT Health and Claude offer enhanced encryption and isolation, at the end of the day, users are still trusting commercial companies with complete medical histories.

Near-Term (2026-2027):

Emerging Technologies:

Regulatory Evolution:

We won’t lie: the timeline is aggressive. The technology is improving fast. But whether AI genuinely improves healthcare or creates new problems depends on choices being made right now by tech companies, healthcare organizations, regulators, and users.

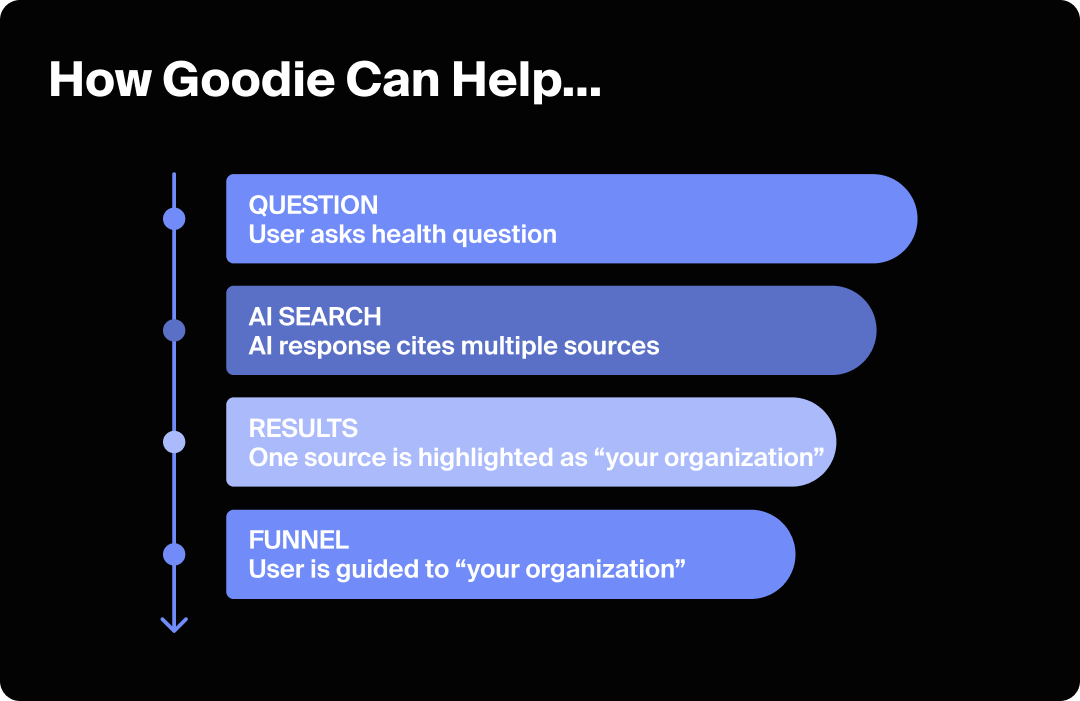

As AI becomes the primary source for health information, healthcare organizations face a new challenge: ensuring accurate representation when millions ask AI about medical topics.

This is where Answer Engine Optimization matters. When someone asks ChatGPT Health about a condition your organization treats, are you cited as an authority? When Claude discusses treatment options, does it reference your research?

Goodie's AEO platform helps healthcare organizations monitor visibility across AI search engines, track brand mentions and sentiment, identify optimization opportunities, and measure how you compare to competitors in the AI ecosystem.

For healthcare marketers, Goodie provides the insights needed to develop effective AI visibility strategies, while our AEO Content Writer helps create content optimized for both traditional search and AI platforms.

In an era where 230M+ people turn to AI for health guidance every week, maintaining visibility isn't optional. Learn more about AEO for healthcare.

AI in healthcare uses machine learning, natural language processing, and other technologies to improve medical care, clinical workflows, and patient outcomes. It includes everything from diagnostic tools that analyze medical images to chatbots that answer health questions to algorithms that predict disease risk.

At its core, AI identifies patterns in large datasets (think medical images, health records, genomic sequences, and patient symptoms) to support diagnosis, treatment decisions, research, and patient care.

Recent launches like ChatGPT Health and Claude for Healthcare represent a shift toward more conversational AI that integrates multiple data sources to provide personalized guidance.

Examples span the entire healthcare ecosystem:

AI in healthcare is a gray area: whether it’s “safe” depends largely on the application. Clinical-grade AI tools with FDA clearance and physician oversight are generally safe for their intended purposes. Consumer chatbots like ChatGPT Health, on the other hand, are reasonably safe for general information but risky for high-stakes medical decisions.

Key safety concerns:

Companies like OpenAI and Anthropic have implemented safety measures: physician collaboration, enhanced privacy, explicit warnings against using AI for diagnosis. But these systems still work by predicting likely responses, not reasoning from verified medical knowledge.

Trust involves technical security, legal protections, and long-term data governance. ChatGPT Health and Claude for Healthcare implement strong encryption and data isolation, which is good. But consumer AI health tools aren't covered by HIPAA, so your data protection relies on company privacy policies that can change.

Dr. Danielle Bitterman from Mass General Brigham suggests: "The most conservative approach is to assume that any information you upload into these tools...will no longer be private."

Accuracy varies based on the question type:

More reliable for:

Less reliable for:

OpenAI worked with 260+ physicians to improve accuracy and created HealthBench to evaluate responses. But AI still hallucinates occasionally, and users can't easily detect errors.

Important: Even when factually correct, AI advice can be contextually inappropriate for your specific situation. Always verify with healthcare providers for anything important.

Quick evaluation checklist:

✓ Check if AI acknowledges uncertainty and limitations

✓ Verify through multiple reputable sources (CDC, Mayo Clinic, specialty organizations)

✓ Be skeptical of very specific personal recommendations

✓ Look for appropriate encouragement to consult healthcare providers

✓ Try to verify specific statistics or study citations

✓ Use common sense (if it seems wrong, it might be)

✓ Compare responses across different AI platforms
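If you review AI answers regularly (for example, as part of a content or compliance workflow), the checklist above can be turned into a small helper that reports which checks are still open. This is only a sketch: the check labels paraphrase the items above, and the `review_answer` function is a hypothetical name, not part of any tool mentioned in this article.

```python
# The seven checks from the list above, as short labels (wording is paraphrased).
CHECKS = (
    "acknowledges uncertainty and limitations",
    "confirmed by multiple reputable sources",
    "avoids very specific personal recommendations",
    "encourages consulting a healthcare provider",
    "statistics or citations could be verified",
    "passes a common-sense check",
    "consistent across different AI platforms",
)


def review_answer(passed: set[str]) -> list[str]:
    """Return the checks that were NOT passed, in checklist order."""
    unknown = passed - set(CHECKS)
    if unknown:
        raise ValueError(f"unrecognized checks: {sorted(unknown)}")
    return [check for check in CHECKS if check not in passed]


if __name__ == "__main__":
    open_checks = review_answer({
        "acknowledges uncertainty and limitations",
        "passes a common-sense check",
    })
    print(f"{len(open_checks)} of {len(CHECKS)} checks still open:")
    for check in open_checks:
        print(f"  - {check}")
```

A helper like this doesn't judge the answer for you. It only makes sure none of the checks gets skipped.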

Remember: AI is best for starting points and learning, not for authoritative medical advice. When in doubt, ask a real doctor.

AI in healthcare has real promise: reducing administrative burden, democratizing health information access, accelerating research, and supporting better clinical decisions. But the challenges (accuracy, privacy, regulation, equity) remain significant and unresolved.

What happens next depends on choices being made now by tech companies, healthcare organizations, regulators, and users. Will AI genuinely improve healthcare or exacerbate existing problems? Will it support clinicians or undermine them? Will it empower patients or mislead them?

The answers will emerge over the coming months and years. For healthcare organizations, staying informed isn't optional. Understanding how AI is reshaping everything from information access to care delivery is essential for remaining relevant in an AI-mediated healthcare landscape.

---

Some icons and visual assets used in this post, as well as in previously published and future blog posts, are licensed under the MIT License, Apache License 2.0, and Creative Commons Attribution 4.0.

https://opensource.org/licenses/MIT