Choosing the right partner for AI Search or Answer Engine Optimization can make or break your discoverability strategy. But if your procurement process relies on a generic request for proposal, you're likely to waste weeks reviewing proposals that miss the mark on what modern search requires.

A well-designed RFP template does more than collect vendor information. It forces AI search agencies to prove they understand relevance tuning, experimentation frameworks, and how to optimize for answer engines like ChatGPT, Perplexity, and Google's AI Overviews. When you start with the right structure, you filter out generalists and surface partners who can move the needle on search performance.

An RFP invites vendors to propose their own approach to solving your stated problem.

In effect, an RFP says: here's the problem we need to solve and the outcomes we need to achieve; show us how you'd approach it. This matters for AI search and AEO work because there isn't one right solution. Different agencies have different methodologies for relevance tuning, experimentation, and answer engine optimization. Some might recommend a complete platform migration, while others propose optimizing your current system with targeted improvements.

The RFP format lets agencies demonstrate expertise by explaining their recommended approach, showing case studies of similar projects, and providing detailed methodology for how they'd diagnose issues and measure success. You're evaluating both what they propose and how they think, not just comparing prices for identical work.

RFPs also include evaluation criteria beyond cost: technical capability, team experience, implementation timeline, ongoing support model, and cultural fit. You might select a more expensive proposal because the agency demonstrated deeper understanding of your specific search challenges or proposed a more innovative testing framework.

For complex, strategic projects like modernizing search or optimizing for answer engines, the RFP format gives you much more signal about which partner will deliver results rather than just the lowest price.

A request for proposal is a formal document that outlines your project needs and invites vendors to submit detailed proposals explaining how they'd deliver those requirements. Unlike an RFQ (request for quote or quotation), which asks vendors to price out a predefined solution, an RFP leaves room for agencies to recommend their own approach based on your strategic goals.

For AI search and AEO work, this distinction matters. Search optimization isn't commodity work where you can just compare line item costs. You need to evaluate methodology, technical capability, and whether an agency has real experience tuning relevance algorithms or building structured data for answer engines.

A strong RFP template structures this evaluation process by placing project context and outcomes at the front before diving into technical requirements. This helps agencies understand what success looks like and craft proposals that speak to your business goals rather than just listing generic capabilities.

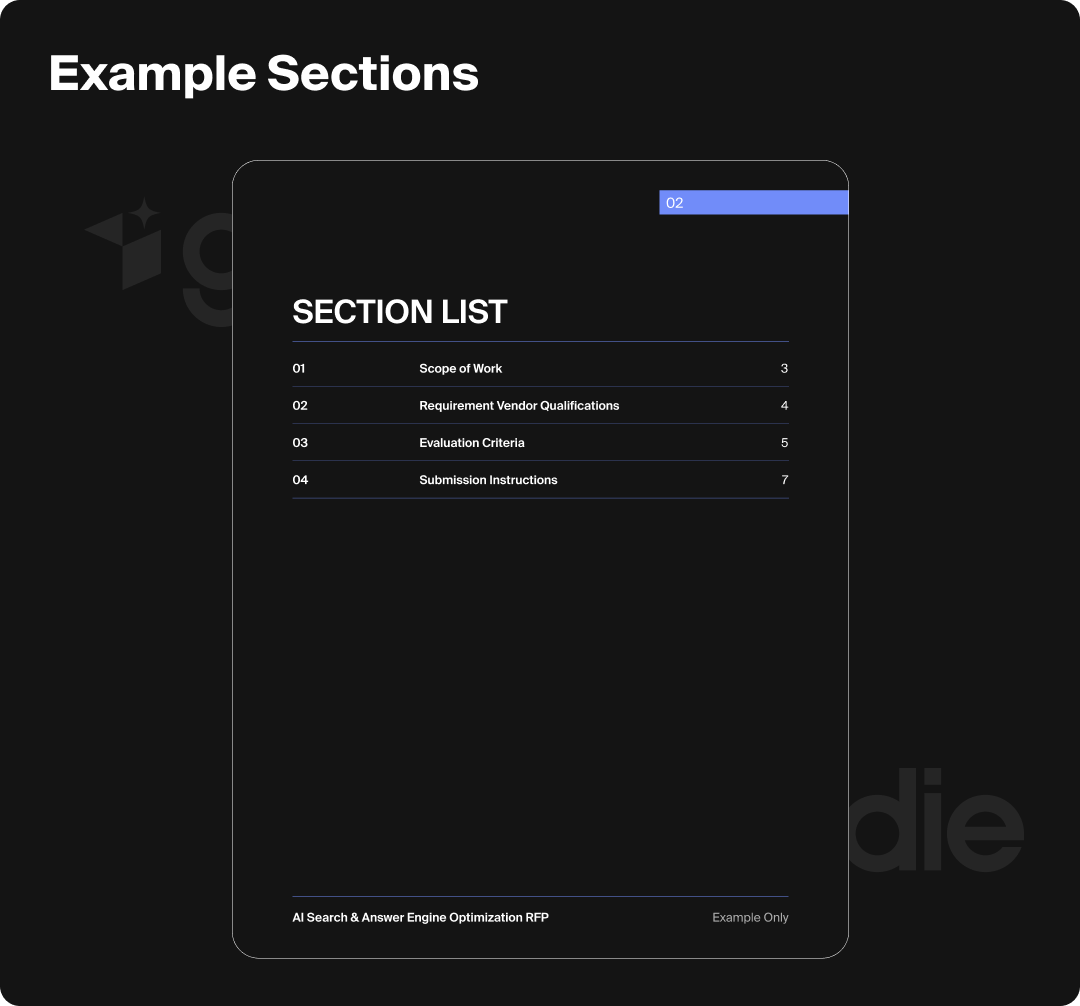

A complete RFP typically runs 15 to 30 pages and follows a consistent structure that makes it easy for vendors to navigate and respond systematically. Formal RFPs begin with a cover page that includes the project title, issuing organization, RFP number, and submission deadline.

The document then progresses through several core sections that build a complete picture of your needs and evaluation process.

This section explains why you're seeking proposals now. For AI search projects, you might describe current search performance issues like low CTR, poor answer engine visibility, or inability to surface relevant product information. The intent section sets strategic context rather than jumping straight into technical specifications.

Here you define what the selected vendor will deliver. For AI search, this might include search relevance audits, implementation of ranking algorithms, structured data markup for answer engines, A/B testing infrastructure, and ongoing optimization. Effective scope sections describe deliverables and outcomes, not just activities.

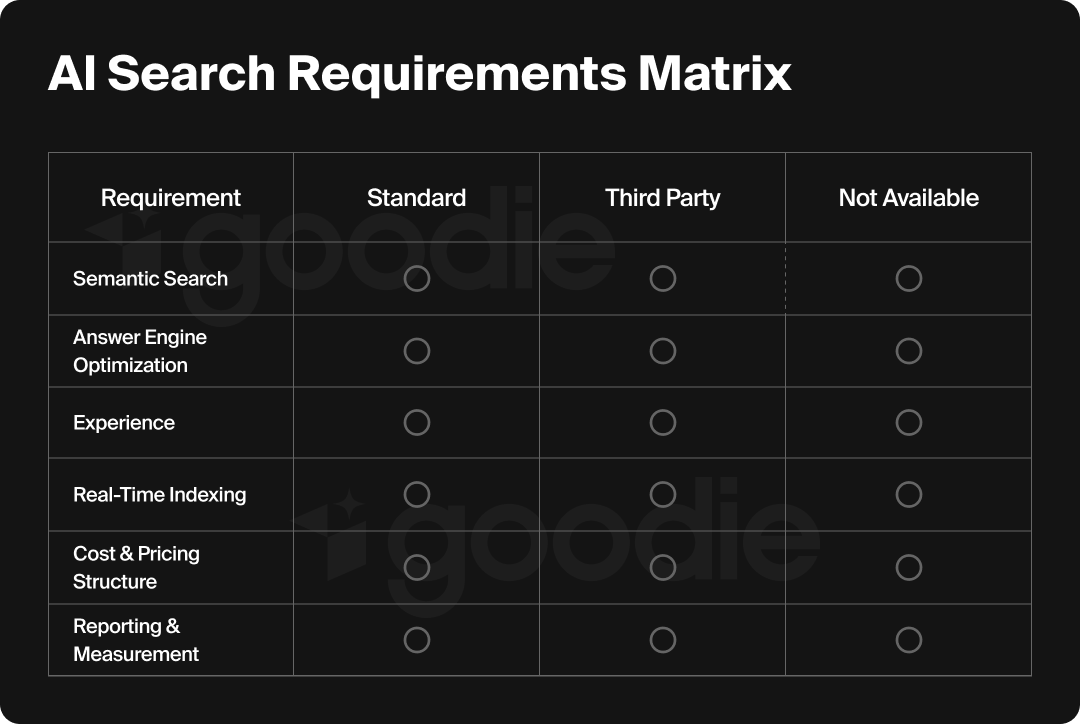

This table breaks down functional and non-functional requirements, with columns indicating whether each capability needs to be standard, customized, or integrated with third-party tools. For AI search RFPs, this is where you'd detail requirements around indexing architecture, natural language processing, semantic search, query understanding, and analytics dashboards.

This section requests specific information about the agency: years in business, number of clients, relevant case studies, team composition, and support coverage. For AI and AEO work, you'd also ask about experience with specific search platforms, familiarity with answer engine guidelines, and any proprietary tools or methodologies they've developed.

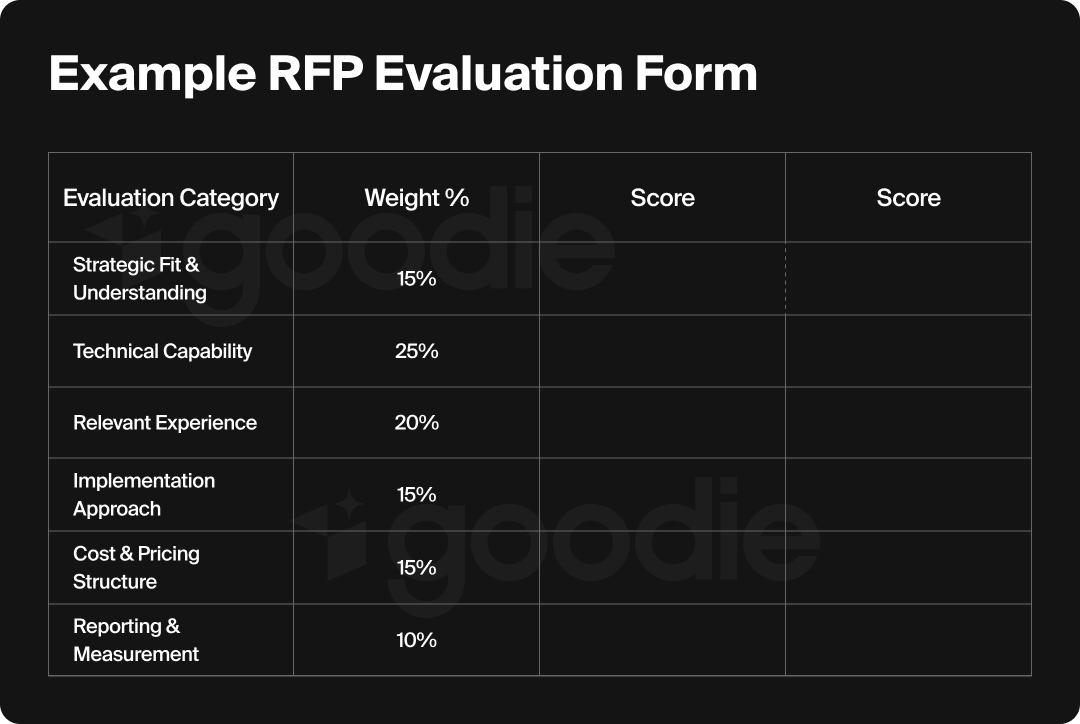

Transparent evaluation criteria tell vendors how you'll score proposals. Common categories include technical capability, understanding of requirements, implementation approach, cost, vendor experience, and innovation. Each criterion receives a numeric weight, making it clear which factors matter most.
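The weighting described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the weights, the 0 to 5 rating scale, and the function name `weighted_score` are all assumptions chosen for the example, and you would substitute the criteria and weights published in your own RFP.

```python
# Hypothetical weights (percent) for the criteria named above.
# They are illustrative only; publish your own in the RFP.
WEIGHTS = {
    "technical_capability": 30,
    "understanding_of_requirements": 20,
    "implementation_approach": 20,
    "cost": 15,
    "vendor_experience": 10,
    "innovation": 5,
}


def weighted_score(ratings: dict[str, float],
                   weights: dict[str, int] = WEIGHTS,
                   scale: float = 5.0) -> float:
    """Convert per-criterion ratings (0..scale) into a 0-100 weighted score."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    # Each criterion contributes its weight times the fraction of the scale earned.
    return sum(weights[name] * ratings[name] / scale for name in weights)


if __name__ == "__main__":
    example = {
        "technical_capability": 4,
        "understanding_of_requirements": 5,
        "implementation_approach": 3,
        "cost": 2,
        "vendor_experience": 4,
        "innovation": 5,
    }
    print(weighted_score(example))
```

Requiring the weights to sum to 100 keeps scores comparable across proposals and makes the relative importance of each criterion easy to read off.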

The final section specifies format requirements, number of copies, submission method (electronic or physical), deadline, and contact information for questions. Detailed submission instructions reduce confusion and ensure you receive proposals in a format that's easy to evaluate.

Appendices include required forms like vendor information sheets, non-disclosure agreements, pricing tables, and any technical specifications or compliance documents vendors need to address.

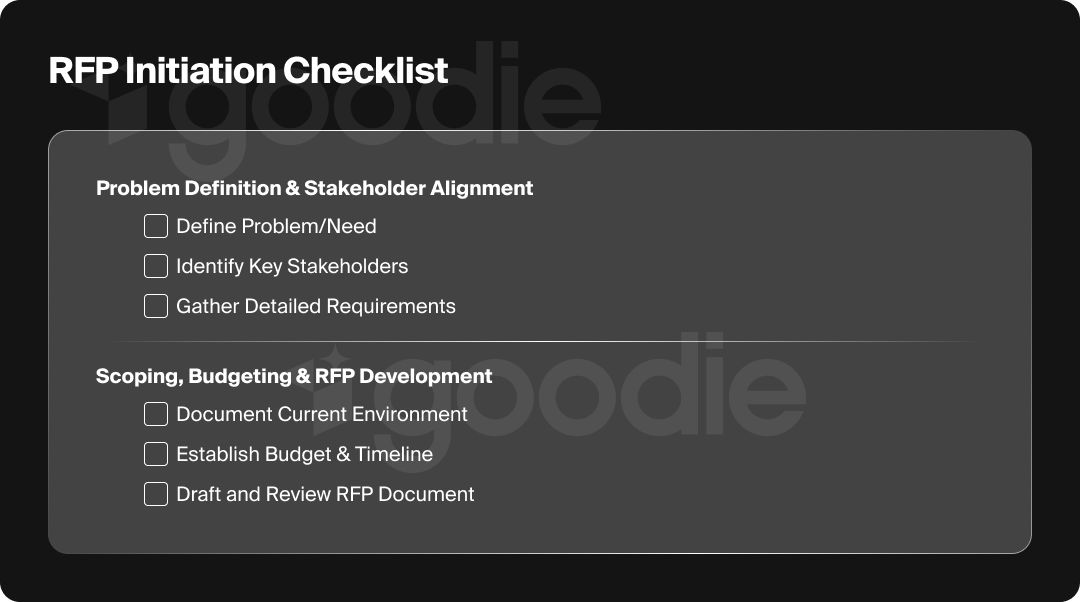

Starting an RFP process requires upfront planning before you write a single word. The initiation phase involves defining the problem, identifying stakeholders, gathering requirements, and aligning on evaluation criteria.

What's broken about your current search experience? Maybe customers can't find products even when searching by name. Maybe your content doesn't appear in ChatGPT or Perplexity results. Maybe internal site search returns irrelevant results 30% of the time. Start by documenting specific pain points with data: bounce rates, zero-result searches, conversion rates, answer engine visibility metrics.

AI search projects touch multiple teams. Marketing needs discoverability and brand presence in answer engines. Product teams need customers to find features quickly. Engineering needs to understand technical architecture and integrations. Legal and privacy teams need to review data handling practices. Bring these stakeholders together early to capture requirements from each perspective.

Run workshops or interviews with stakeholders to collect specific requirements. Tag each requirement as must-have, should-have, or nice-to-have so vendors understand what's negotiable. For AI search, must-haves might include semantic understanding of natural language queries, real-time indexing, and structured data implementation. Nice-to-haves might include predictive search suggestions or personalization features.
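One way to keep these tags consistent across workshops is to record each requirement as a small structured entry. The sketch below assumes hypothetical requirement IDs and stakeholder owners; only the requirement descriptions and the three priority levels come from the text above.

```python
from dataclasses import dataclass
from enum import Enum


class Priority(Enum):
    MUST = "must-have"
    SHOULD = "should-have"
    NICE = "nice-to-have"


@dataclass(frozen=True)
class Requirement:
    req_id: str        # hypothetical numbering scheme
    description: str
    priority: Priority
    owner: str         # stakeholder team that raised it (illustrative)


REQUIREMENTS = [
    Requirement("R-01", "Semantic understanding of natural language queries", Priority.MUST, "product"),
    Requirement("R-02", "Real-time indexing", Priority.MUST, "engineering"),
    Requirement("R-03", "Structured data implementation", Priority.MUST, "marketing"),
    Requirement("R-04", "Predictive search suggestions", Priority.NICE, "product"),
    Requirement("R-05", "Personalization features", Priority.NICE, "marketing"),
]


def group_by_priority(requirements):
    """Group requirements under each priority level, keeping input order."""
    grouped = {priority: [] for priority in Priority}
    for requirement in requirements:
        grouped[requirement.priority].append(requirement)
    return grouped


if __name__ == "__main__":
    for priority, items in group_by_priority(REQUIREMENTS).items():
        print(priority.value, [r.req_id for r in items])
```

Giving every requirement a stable ID at this stage also makes it easier for vendors to reference requirements individually in their responses.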

Vendors need to understand your starting point. Describe your current search infrastructure, data volumes, query patterns, traffic levels, and existing integrations. Include baseline metrics so agencies can quantify improvement potential.

Define what good looks like with concrete KPIs. For AI search, this might include increasing answer engine visibility by 40%, improving search click-through rate to 35%, reducing zero-result queries to under 5%, or increasing conversion from search traffic by 20%.
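To make KPIs like these measurable, you need a baseline computed the same way you will later measure success. The following sketch assumes a simplified search log where each event records the result count and whether the user clicked; the `SearchEvent` shape and function names are hypothetical, and the targets mirror the example figures above.

```python
from dataclasses import dataclass


@dataclass
class SearchEvent:
    query: str
    result_count: int
    clicked: bool


def baseline_metrics(events):
    """Compute zero-result rate and click-through rate over a list of events."""
    total = len(events)
    if total == 0:
        raise ValueError("no search events to measure")
    zero_results = sum(1 for e in events if e.result_count == 0)
    clicks = sum(1 for e in events if e.clicked)
    return {
        "zero_result_rate": zero_results / total,
        "click_through_rate": clicks / total,
    }


# ("max", x) means the metric must stay under x; ("min", x) means at least x.
TARGETS = {
    "zero_result_rate": ("max", 0.05),
    "click_through_rate": ("min", 0.35),
}


def gaps(metrics, targets=TARGETS):
    """Return {kpi: (current, target)} for every KPI that misses its target."""
    missed = {}
    for name, (kind, target) in targets.items():
        value = metrics[name]
        met = value < target if kind == "max" else value >= target
        if not met:
            missed[name] = (value, target)
    return missed
```

Sharing the baseline figures and the exact metric definitions in the RFP lets agencies quote improvements against the same numbers you will use to judge them.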

Decide on your budget ceiling and project timeline before issuing the RFP. Sharing budget ranges in the RFP helps agencies propose realistic solutions rather than wasting everyone's time with proposals that are over or under your target.

Create the first draft using a structured template, then circulate it to stakeholders for review. Using collaborative tools lets cross-functional teams comment and edit together, which catches ambiguous requirements and missing details before vendors see them.

The complete RFP process moves through seven distinct phases, each with specific activities and outcomes.

This is where you define objectives, identify stakeholders, and determine scope. For AI search projects, planning includes documenting current search performance, setting target KPIs, and deciding whether you need a full search platform replacement or just optimization of your existing system. Planning also involves identifying internal resources: who will serve on the evaluation committee, who manages vendor relationships, and who owns implementation once a vendor is selected.

Now you write the RFP document following a proven template structure. Start with sections that explain project background and desired outcomes before diving into technical requirements. Keep language clear and specific rather than using vague terms like "best in class" or "enterprise grade". For AI search, spell out which search scenarios you need to support: product search, content discovery, customer support, or something else entirely.

Circulate the draft to all stakeholders for feedback. Legal reviews contractual terms and compliance requirements. Finance reviews budget and payment terms. Technical teams review feasibility of requirements. Marketing reviews how the RFP positions your organization. Incorporate feedback and get final sign-off from decision makers before release.

Publish the RFP through whatever channels make sense for your market. This might mean posting it on your procurement portal, sending it to a shortlist of prequalified agencies, or listing it on RFP databases. Include a clear question-and-answer period during which potential vendors can submit questions, and publish the answers to all vendors to maintain fairness.

Give vendors adequate time to respond, typically 3 to 6 weeks for complex projects like AI search implementation. During this phase, vendors will request clarifications, which you should answer in writing and share with all participants.

Use a structured scoring rubric to rate each proposal against your published criteria. Have each evaluation committee member score independently, then meet to discuss scores and identify finalists. Common practice is to shortlist 2 to 3 vendors for final presentations or proof of concept demonstrations. Document strengths, weaknesses, and risks for each proposal to support your final decision.
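The independent-scoring step can be summarized with a short script. This is a sketch under assumptions: each evaluator has already produced one total score per vendor, the vendor and evaluator names are placeholders, and the spread (highest minus lowest score) is used here as a simple signal of where the committee disagrees and should discuss.

```python
from statistics import mean


def summarize(scores: dict[str, dict[str, float]]) -> list[dict]:
    """scores[vendor][evaluator] = total score.

    Returns one row per vendor with the mean score and the spread between
    evaluators, sorted from highest to lowest mean.
    """
    rows = []
    for vendor, by_evaluator in scores.items():
        values = list(by_evaluator.values())
        rows.append({
            "vendor": vendor,
            "mean": mean(values),
            "spread": max(values) - min(values),
        })
    return sorted(rows, key=lambda row: row["mean"], reverse=True)


def shortlist(scores: dict[str, dict[str, float]], size: int = 3) -> list[str]:
    """Return the top `size` vendors by mean committee score."""
    return [row["vendor"] for row in summarize(scores)[:size]]


if __name__ == "__main__":
    committee_scores = {
        "Vendor A": {"eval_1": 80, "eval_2": 90},
        "Vendor B": {"eval_1": 70, "eval_2": 70},
        "Vendor C": {"eval_1": 60, "eval_2": 95},
        "Vendor D": {"eval_1": 50, "eval_2": 55},
    }
    for row in summarize(committee_scores):
        print(row)
    print(shortlist(committee_scores))
```

A large spread does not disqualify a vendor; it flags a proposal that evaluators read differently, which is worth resolving before finalists are chosen.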

Choose your vendor, notify all participants of the outcome, and begin contract negotiation. Best practice is to debrief unsuccessful vendors so they understand why they weren't selected, which maintains relationships and improves future responses.

A specialized template for AI search and AEO projects should include all the standard RFP sections plus requirements specific to modern search technology and answer engine optimization.

Results-driven RFPs start by listing the business outcomes you need: increased revenue from search traffic, higher answer engine visibility, improved customer satisfaction with search results, or reduced support costs from better self-service search. This frames the project in business terms before diving into technical details.

Create a structured requirements table where vendors indicate whether each capability is available out of the box, requires configuration, needs custom development, or isn't available.
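Once vendors fill in such a table, their answers can be checked mechanically before the detailed review. The sketch below uses the four availability levels named above; the requirement IDs and function names are hypothetical, and an unanswered requirement is treated as not available, which is an assumption you may want to relax.

```python
from enum import Enum


class Availability(Enum):
    OUT_OF_THE_BOX = "out of the box"
    CONFIGURATION = "requires configuration"
    CUSTOM = "needs custom development"
    NOT_AVAILABLE = "not available"


def must_have_gaps(must_have_ids, responses):
    """Return must-have IDs the vendor marked not available or left unanswered."""
    return [
        req_id for req_id in must_have_ids
        if responses.get(req_id, Availability.NOT_AVAILABLE) is Availability.NOT_AVAILABLE
    ]


def custom_development_share(responses):
    """Fraction of answered requirements that need custom development."""
    if not responses:
        return 0.0
    custom = sum(1 for a in responses.values() if a is Availability.CUSTOM)
    return custom / len(responses)


if __name__ == "__main__":
    vendor_responses = {
        "R-01": Availability.OUT_OF_THE_BOX,
        "R-02": Availability.CUSTOM,
        "R-03": Availability.NOT_AVAILABLE,
        "R-04": Availability.CONFIGURATION,
    }
    print(must_have_gaps(["R-01", "R-02", "R-03", "R-05"], vendor_responses))
    print(custom_development_share(vendor_responses))
```

A high share of custom development is not necessarily a problem, but it usually signals more implementation risk and cost than a proposal built mostly on standard capabilities.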

For AI search, break requirements into categories:

Ask vendors to detail their implementation approach including phases, milestones, roles and responsibilities, resource requirements, data migration plan, training program, and risk mitigation strategies. For AI search projects, this should include their methodology for baseline measurement, relevance tuning cycles, and how they'll prioritize optimization efforts.

Require vendors to provide specific examples of similar AI search or AEO projects they've completed, including the client's industry, project scope, challenges overcome, and quantifiable results. Request contact information for reference clients who can speak to the vendor's expertise and working relationship.

When evaluating case studies, look for agencies that can demonstrate measurable improvements in answer engine visibility and search performance. For instance, agencies specializing in Answer Engine Optimization should be able to show concrete examples of how they've helped brands appear in AI search results across platforms like ChatGPT, Perplexity, and Google's AI Overviews.

Design pricing tables that separate implementation costs from ongoing optimization costs, and break down what's included in each. For AI search, distinguish between one-time costs (platform setup, initial tuning, data migration) and recurring costs (ongoing optimization, support, platform licenses).
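Separating the two cost types also lets you compare proposals over the same time horizon. The sketch below is illustrative only: the line items follow the categories above, but every figure and the 24-month horizon are invented for the example, and recurring costs are assumed to be quoted monthly.

```python
def total_cost(one_time: dict[str, int],
               monthly_recurring: dict[str, int],
               months: int) -> int:
    """Total cost over `months`: one-time items plus monthly items times duration."""
    if months < 0:
        raise ValueError("months must be non-negative")
    return sum(one_time.values()) + months * sum(monthly_recurring.values())


if __name__ == "__main__":
    # Hypothetical figures for a single proposal.
    one_time = {
        "platform_setup": 40_000,
        "initial_tuning": 15_000,
        "data_migration": 10_000,
    }
    monthly = {
        "ongoing_optimization": 5_000,
        "support": 1_500,
        "platform_licenses": 2_000,
    }
    print(total_cost(one_time, monthly, months=24))
```

Comparing totals at more than one horizon, for example 12 and 36 months, shows whether a proposal with low setup fees becomes the more expensive option once recurring costs accumulate.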

Build a scoring matrix with weighted criteria specific to AI search evaluation:

If you're an agency responding to AI search RFPs, structure your response to mirror the buyer's RFP sections while highlighting what makes your approach unique.

Lead with a client-centric summary that demonstrates you understand their specific search challenges and articulates the business value you'll deliver. Reference their stated KPIs and explain how your methodology addresses their priorities.

Create a table that lists every numbered requirement from the RFP and indicates where in your proposal you've addressed it. This makes evaluation easier and shows you've been thorough.
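A compliance table like this is easy to generate and check with a short script. The sketch assumes the RFP numbers its requirements and that you keep a mapping from requirement ID to the proposal section that answers it; the IDs, section labels, and function names are hypothetical.

```python
NOT_ADDRESSED = "NOT ADDRESSED"


def compliance_matrix(requirement_ids, addressed_in):
    """Return (requirement ID, proposal section) rows in RFP order."""
    return [(req_id, addressed_in.get(req_id, NOT_ADDRESSED)) for req_id in requirement_ids]


def unaddressed(requirement_ids, addressed_in):
    """Return requirement IDs that have no proposal section mapped to them."""
    return [req_id for req_id, section in compliance_matrix(requirement_ids, addressed_in)
            if section == NOT_ADDRESSED]


if __name__ == "__main__":
    rfp_requirements = ["R-01", "R-02", "R-03"]
    proposal_sections = {
        "R-01": "Section 3.1 Methodology",
        "R-03": "Section 4.2 Structured Data",
    }
    for row in compliance_matrix(rfp_requirements, proposal_sections):
        print(row)
    print(unaddressed(rfp_requirements, proposal_sections))
```

Running the check before submission catches requirements that were missed entirely, which is the most avoidable way to lose points in evaluation.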

Explain your process for diagnosing search issues, prioritizing improvements, and measuring success. For AI search, detail how you approach relevance tuning, how you incorporate user behavior data, and how you optimize for answer engines specifically.

Include detailed examples of similar projects with specific metrics. Rather than vague statements about improved search performance, show that you increased search revenue by 32% or improved answer engine visibility by 45% for specific clients.

Name the actual people who would work on the project, include their credentials and experience, and clarify which roles are dedicated versus shared resources.

Maintaining a centralized content library of pre-approved answers to common RFP questions speeds up response time while ensuring consistency. Build reusable sections for company overview, methodology explanations, team bios, and standard case studies, then customize them for each specific RFP.

When you're selecting a partner to modernize your search experience or optimize for answer engines, the RFP process forces discipline on both sides. Buyers must articulate specific goals and requirements rather than vague aspirations for better search. Agencies must demonstrate concrete expertise rather than just claiming to be cutting-edge or innovative.

A well-designed RFP template surfaces the information you need to evaluate whether an agency can deliver results: their methodology for relevance tuning, their experience with answer engine optimization, their approach to experimentation and measurement, and their understanding of your specific search challenges.

The template creates a common framework that makes proposals comparable while still giving agencies room to differentiate themselves through approach and innovation. This balance between structure and creativity helps you find partners who can both execute and think strategically about how AI search connects to business outcomes.

For AI search and AEO work specifically, where the technology and best practices evolve rapidly, the RFP process also reveals which agencies stay current with emerging trends versus those recycling outdated approaches. Agencies at the forefront will reference optimization for ChatGPT, Perplexity, and Google's AI Overviews, not just traditional organic search. They'll talk about structured data, knowledge graphs, and entity optimization, not just keywords and metadata.

Taking time to build a thorough RFP template pays off in faster evaluation, better vendor alignment, and selecting a partner who can move the needle on search performance and answer engine visibility. Whether you're looking to improve your brand's presence in AI search results or overhaul your entire search infrastructure, starting with a structured RFP ensures you're asking the right questions and evaluating vendors on the criteria that matter.