How people research wearable tech like smartwatches, rings, and activity trackers has shifted from search results to AI answers. Instead of scrolling through product pages or comparison posts, consumers now ask AI models like ChatGPT, Gemini, Claude, and Perplexity to explain, compare, and validate devices that track health, fitness, and biometric data. That shift changes who gets visibility and, more importantly, who earns trust.

In this study, we analyzed 37,800 AI citations tied specifically to health and wearable technology queries in the U.S. market. What the data reveals is striking: AI models rarely cite wearable brands directly. Instead, they rely on a concentrated network of third parties to interpret product specs, explain health implications, and translate innovation into understandable guidance.

This post breaks down which wearable tech domains AI models trust most, how trust is distributed across reference sites, video platforms, and review publishers, and why visibility in AI search is no longer about owning attention but about earning credibility inside the sources AI already trusts.

This analysis is based on 37,800 AI citations related to health wearables and wearable technology queries in the U.S. market, collected from September through December 2025. The goal wasn’t to measure rankings or traffic, but to understand which domains AI models actually rely on when generating answers about wearable tech.

Each citation represents a domain explicitly referenced by an AI model when responding to wearable-related prompts, including questions about fitness trackers, smartwatches, biometric monitoring, health accuracy, and device comparisons.

To move beyond raw counts, we analyzed domains using three primary signals:

Domains were also categorized (e.g., reference, video, affiliate/editorial, commerce) to understand what role each type of source plays in AI answers.

Together, this methodology allows us to see not just who appears, but who AI models trust to explain, validate, and contextualize wearable technology, setting the foundation for the patterns and rankings that follow.
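To make the counting concrete, here is a minimal sketch of how citation volume and citation share could be computed from a flat list of cited domains. It is an illustration, not the pipeline used for this study: the function name `citation_stats` and the sample domains are hypothetical, and the influence score is left out because its formula isn’t described here.

```python
from collections import Counter

def citation_stats(cited_domains):
    """Return (domain, volume, share) tuples, most-cited domain first.

    volume = number of citations for the domain
    share  = volume divided by the total number of citations
    """
    counts = Counter(cited_domains)
    total = sum(counts.values())
    if total == 0:
        return []
    return [(domain, n, n / total) for domain, n in counts.most_common()]

# Hypothetical toy sample (placeholder domains, not data from the study).
sample = [
    "reference.example",
    "video.example",
    "reference.example",
    "reviews.example",
]
stats = citation_stats(sample)

for domain, volume, share in stats:
    print(f"{domain}: {volume} citations, {share:.0%} share")
```

Share is simply a domain’s volume normalized by the total, which is what makes domains comparable across datasets of different sizes.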

The wearable tech AI ecosystem is highly centralized around a small set of interpretive domains, not brands. Across the 37,800 wearable tech citations analyzed, a handful of sources consistently dominate AI answers, with influence dropping off quickly after the top tier.

At the very top:

What’s notable isn’t just who leads, but how quickly influence concentrates at the top. Each of the top-ranked domains captures roughly 5% of total citation share, and influence scores decline sharply beyond this first cluster.

In practical terms, this means that AI models are repeatedly returning to the same few sources to explain wearable technology, regardless of brand innovation or product novelty.

The result is a trust bottleneck:

Wearable tech discovery in AI search is mediated by a small group of domains that specialize in explanation, demonstration, and comparison, not by the companies building the devices themselves.

This concentration sets the stage for the rankings that follow, and helps explain why even well-known wearable brands struggle to appear directly in AI answers. Visibility isn’t distributed evenly; it’s earned by the domains AI models already rely on to make sense of complex, health-adjacent technology.
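One simple way to quantify this kind of concentration is the cumulative share held by the top few domains. The sketch below assumes a flat list of cited domains; `top_k_share` and the sample data are hypothetical and are not the study’s own measure.

```python
from collections import Counter

def top_k_share(cited_domains, k):
    """Fraction of all citations captured by the k most-cited domains."""
    counts = Counter(cited_domains)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(n for _, n in counts.most_common(k)) / total

# Hypothetical toy sample: 10 citations spread over 3 placeholder domains.
sample = ["a.example"] * 5 + ["b.example"] * 3 + ["c.example"] * 2

top1 = top_k_share(sample, 1)
top2 = top_k_share(sample, 2)
```

A value that climbs quickly as `k` grows from 1 to a handful of domains indicates the kind of bottleneck described above.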

When you look at wearable tech citations across AI models, a clear pattern emerges: the domains that AI trusts most are not device makers, but translators. These sites specialize in defining concepts, demonstrating usage, and evaluating products in ways AI models can confidently re-use.

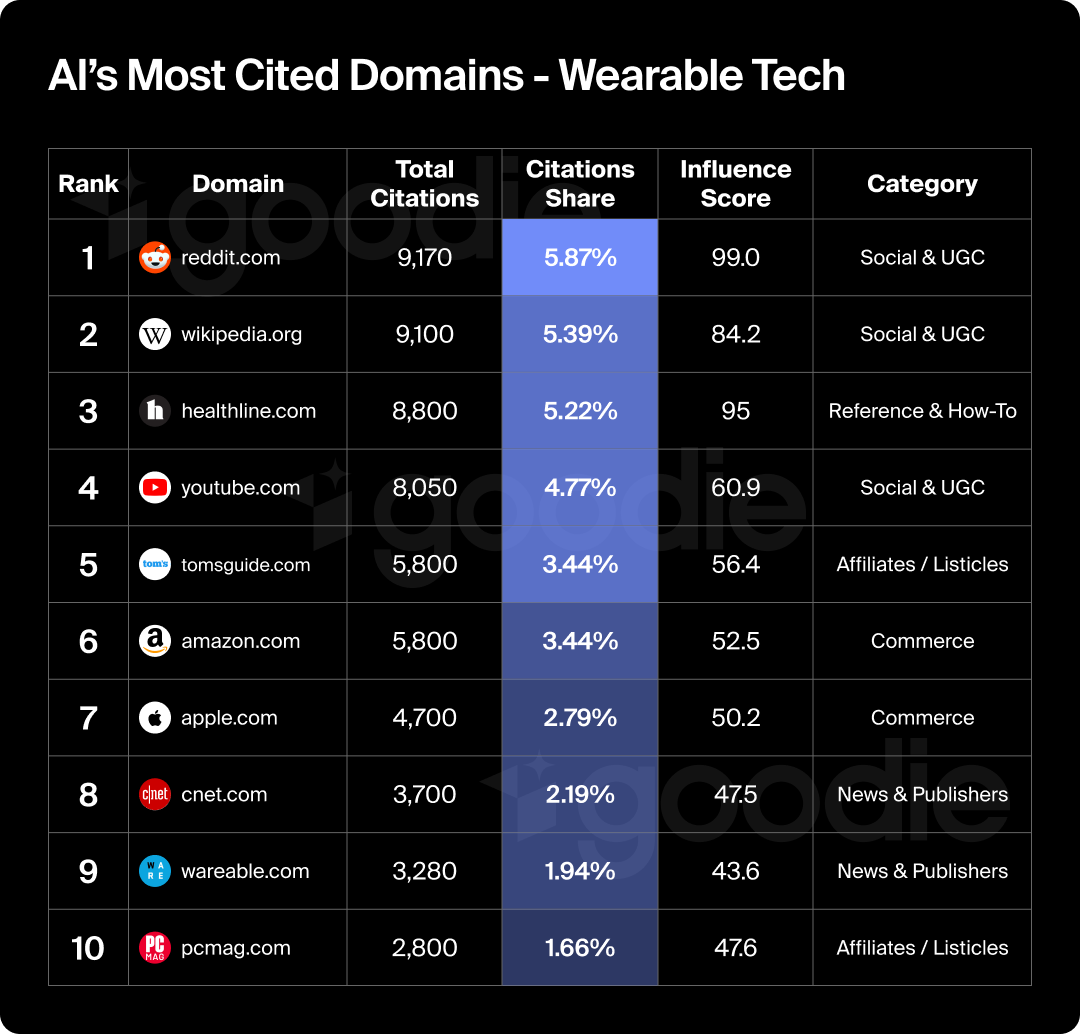

Based on the 37,800 wearable tech citations we collected, the following domains appear most frequently across ChatGPT, Claude, Gemini, and Perplexity, ranked by citation volume, share, and overall influence.

A few things stand out immediately:

The most important takeaway is structural: AI models don’t reward ownership of products, but they do reward ownership of understanding.

These domains act as trusted intermediaries, helping AI tools explain wearable technology in ways users can actually act on. In the next section, we’ll break down why these domains win and what they have in common.

When you look across the most-cited wearable tech domains, the pattern isn’t about traffic size or brand recognition. It’s about how well these sources reduce uncertainty for AI models.

Wearable technology sits at the intersection of hardware, software, and health, and the domains that dominate AI citations are the ones that help models explain that complexity clearly and safely.

Domains like Wikipedia consistently appear at the top because they provide:

For AI systems, reference sources act as a grounding layer, establishing what something is before evaluating whether it’s good.

Wearables are experiential products. Metrics like heart rate accuracy, sleep tracking, or workout detection are difficult to understand abstractly, which helps explain why YouTube commands over 5% of all wearable-tech citations.

Video content gives AI access to:

This makes YouTube uniquely valuable for AI answers that need to validate claims beyond manufacturer specs.

Editorial and review websites like Tom’s Guide and CNET consistently outrank brand-owned domains because they translate technical features into outcomes users care about.

These domains excel at:

AI models favor this kind of evaluative content because it mirrors how users make decisions, not how brands market products.

The presence of medical and health reference sites like Healthline highlights an important dynamic: when wearable tech crosses into health, safety and accuracy outweigh novelty.

For AI models, health-adjacent claims require:

This is why even highly innovative wearable brands struggle to earn citations without third-party health validation.

Across all top domains, one theme is consistent:

AI models trust sources that explain, validate, and contextualize, not sources that persuade.

These domains don’t just describe wearable products; they help AI systems reason about them. That distinction explains why trust concentrates where it does and sets up the next question: how AI organizes these sources into a broader trust hierarchy.

Up next, we’ll break down the Wearable Tech Trust Stack and show how these domain types work together to shape AI-generated answers.

AI models don’t pull citations at random. Across the wearable-tech data, citations consistently fall into distinct roles that work together to answer user questions. Think of this as a layered system: each layer solves a different trust problem, and AI models combine them to form a complete response.

What It Solves: “What is this, and how does it work?”

This layer anchors AI answers with:

It’s why reference-style domains consistently appear early in AI responses: models need a factual baseline before making recommendations.

What It Solves: “Can this actually do what it claims?”

Wearables are experiential. AI models lean on this layer to validate:

This layer is especially prominent for fitness tracking, sleep monitoring, and biometric features where specs alone aren’t convincing.

What It Solves: “Which option is best for me?”

Here, AI models rely on domains that:

This layer dominates recommendation-style prompts like “best smartwatch for runners” or “most accurate fitness tracker.”

What It Solves: “Is this safe, accurate, and appropriate?”

When wearable tech intersects with health, AI models introduce more conservative sourcing. This layer provides:

Even lifestyle wearables can trigger this layer when prompts involve heart health, sleep disorders, or biometric monitoring.

The data shows that domains appearing across multiple layers earn higher influence scores than those confined to a single role. AI models prefer to triangulate trust (definitions, demonstrations, evaluations, and safety context working together) rather than rely on one source alone.

This is the structural reason wearable brands struggle with AI visibility: Most brands try to occupy one layer (promotion), while AI trust is built across four.
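If each citation were tagged with the layer it serves, multi-layer coverage could be checked with a few lines of code. This is a rough sketch under that assumption: the layer labels mirror the four layers named in this post, while `layers_per_domain`, the tagging itself, and the sample domains are hypothetical.

```python
from collections import defaultdict

# The four trust layers discussed in this post.
LAYERS = ("reference", "demonstration", "evaluation", "health_context")

def layers_per_domain(tagged_citations):
    """Map each domain to the set of trust layers it was cited in.

    tagged_citations: iterable of (domain, layer) pairs.
    """
    coverage = defaultdict(set)
    for domain, layer in tagged_citations:
        if layer not in LAYERS:
            raise ValueError(f"unknown layer: {layer!r}")
        coverage[domain].add(layer)
    return dict(coverage)

# Hypothetical toy sample (placeholder domains, not data from the study).
tagged = [
    ("reference.example", "reference"),
    ("video.example", "demonstration"),
    ("reviews.example", "evaluation"),
    ("reviews.example", "demonstration"),
]
coverage = layers_per_domain(tagged)

# Domains that show up in two or more layers.
multi_layer = sorted(d for d, layers in coverage.items() if len(layers) >= 2)
```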

Next up, we’ll look at model-level differences: how ChatGPT, Gemini, Claude, and Perplexity each weight these layers differently when answering wearable-tech questions.

While the same wearable-tech question can be asked across AI models, the sources they trust and how they assemble answers differ in meaningful ways. The citation data shows that each model applies its own weighting to the wearable tech trust stack, which has direct implications for where brands should focus their visibility efforts.

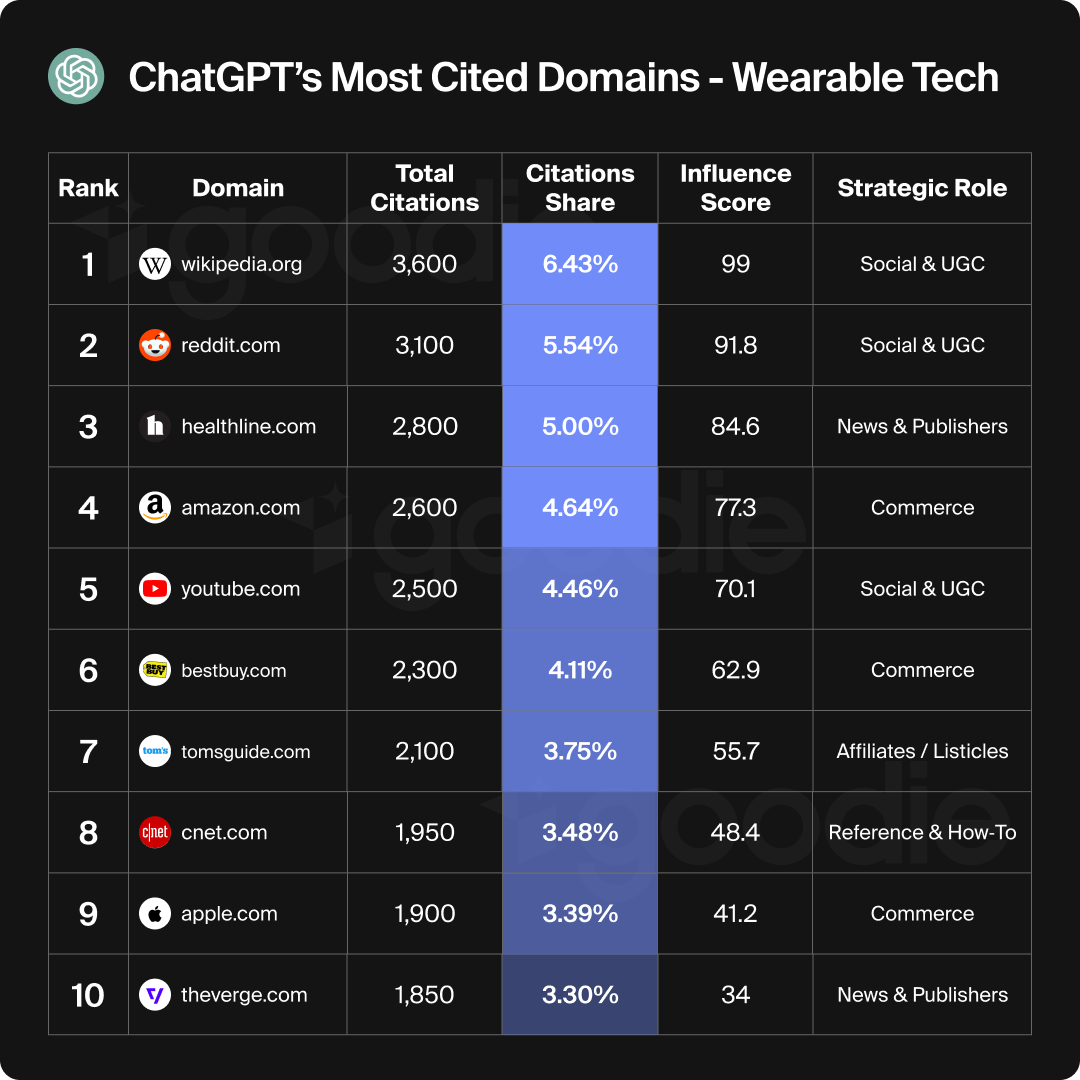

ChatGPT shows the most even distribution of citations across reference, demonstration, and evaluation layers.

What stands out in the data:

Strategic Implication: ChatGPT rewards breadth of presence. Brands benefit most when they’re visible across multiple trusted domains rather than optimizing for a single source type.

Gemini leans more heavily on structured, tech-forward domains and comparison-style content.

What stands out in the data:

Strategic Implication: Gemini favors clarity and comparability. Domains that break down specs, benchmarks, and tradeoffs perform best here.

Claude is the most conservative model when it comes to wearable tech, especially in health-adjacent prompts.

What stands out in the data:

Strategic Implication: For brands making biometric or health-related claims, third-party validation matters most with Claude. Medical and reference inclusion carries outsized weight.

Perplexity behaves most like a research assistant, citing aggressively and favoring domains that already package insights cleanly.

What stands out in the data:

Strategic Implication: Perplexity rewards well-structured, explainer-ready content. If a domain already does the synthesis work, Perplexity is more likely to reuse it.

There is no single “best” domain strategy for wearable tech. AI visibility is model-specific. A strategy optimized for ChatGPT may underperform on Claude or Gemini.

Brands that understand these differences can prioritize the AI systems their customers actually use and focus on the trust layers those models value most.
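To compare models on equal footing, it helps to look at each model’s citations as a mix of layers rather than as raw counts. The sketch below assumes citations are available as (model, layer) pairs; `layer_mix_by_model` and the sample records are hypothetical.

```python
from collections import Counter, defaultdict

def layer_mix_by_model(records):
    """Return {model: {layer: share}} from (model, layer) pairs.

    Shares are computed within each model, so they sum to 1 per model.
    """
    counts = defaultdict(Counter)
    for model, layer in records:
        counts[model][layer] += 1
    mix = {}
    for model, layer_counts in counts.items():
        total = sum(layer_counts.values())
        mix[model] = {layer: n / total for layer, n in layer_counts.items()}
    return mix

# Hypothetical toy sample with placeholder model names.
records = [
    ("model_a", "reference"),
    ("model_a", "evaluation"),
    ("model_b", "reference"),
    ("model_b", "reference"),
]
mix = layer_mix_by_model(records)
```

Normalizing within each model keeps a model that cites heavily from drowning out one that cites sparingly.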

Next up, we’ll zoom out again and look at category-level patterns inside wearable tech, showing how trust shifts between fitness, lifestyle, and health-driven devices.

Not all wearable tech is treated equally by AI models. When we break citations down by device category and query intent, trust patterns emerge. The more health-adjacent or risk-sensitive the prompt, the more conservative and reference-heavy AI sourcing becomes. Lifestyle and fitness queries, on the other hand, lean toward demonstration and evaluation.

Dominant Trust Layers: Demonstration + Evaluation

Prompts like “best fitness tracker for running” or “accurate calorie tracking wearable” skew toward:

Why: Fitness tracking is framed as performance optimization, not medical risk. AI models prioritize proof of usability and comparative insights over formal validation.

Dominant Trust Layers: Evaluation + Reference

For broader smartwatch queries, AI answers tend to:

Why: These devices sit between lifestyle and health. AI models balance explanation with buying guidance, leaning on domains that translate complexity without overstating impact.

Dominant Trust Layers: Reference + Health & Safety Context

Queries involving heart rate accuracy, sleep disorders, stress monitoring, or biometrics trigger a different behavior:

Why: As perceived risk increases, AI models become more conservative. They prioritize safety, accuracy, and explanatory grounding over optimization or novelty.

Across categories, one rule holds: The higher the health risk implied by the prompt, the narrower and more conservative the AI trust network becomes.

This explains why some wearable brands appear sporadically in fitness-focused answers but disappear entirely in health-related ones. Visibility depends not just on the product but on which category of trust the question activates.

Next, we’ll translate these patterns into implications by looking at what this all means for wearable tech brands, and why most remain effectively invisible in AI search today.

The data points to a hard truth: wearable brands are largely invisible in AI search, and it’s by design, not by mistake. Across the most-cited domains, brand-owned sites rarely appear, even when the questions are explicitly about products those brands make.

This isn’t a content volume problem or an SEO execution gap. It’s structural.

AI models are built to minimize risk and maximize interpretability. As a result, they consistently favor third-party validation over self-reported claims. In wearable tech (where accuracy, health implications, and real-world performance matter), brand messaging is treated as inherently biased.

The citation data reinforces this behavior:

Most wearable brands operate almost exclusively in a fifth, untrusted layer: promotion. And that layer is rarely cited.

Wearable tech is one of the fastest-moving product categories, but AI trust lags behind innovation. New sensors, algorithms, and features don’t earn citations on their own. AI models wait for those claims to be:

Until that happens, brands remain absent from AI answers, even if they dominate traditional search or retail channels.

The most important implication is this: AI visibility isn’t something brands can fully control on their own websites.

It’s earned indirectly through presence inside the domains AI already trusts. That shifts the strategy away from publishing more blog posts and toward orchestrating credibility across the trust stack: reference, demonstration, evaluation, and health context.

For wearable tech brands, the question is no longer “How do we rank?” It’s “Where does AI need to see us before it will trust us?”

Next, we’ll translate this reality into strategic next steps: what wearable brands can actually do to increase AI visibility, and where to focus first.

The takeaway from this study isn’t that AI visibility is impossible. It’s that it requires a fundamentally different operating model than traditional SEO or product marketing. The brands that break through in AI search treat trust as something they engineer across systems, not something they publish once and hope for the best.

Here’s how to translate the data into action.

Start by identifying which layers of the wearable tech trust stack you currently occupy and which ones you don’t.

Ask:

Most brands discover they’re visible in zero or one layer, which explains why AI models skip them entirely.
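A layer audit can start as a simple checklist. The sketch below assumes you have already collected the third-party sources that mention your brand and labeled each with a layer; `audit_layers`, the labels, and the sample entry are hypothetical.

```python
# The four trust layers discussed in this post.
LAYERS = ("reference", "demonstration", "evaluation", "health_context")

def audit_layers(brand_mentions):
    """Report which trust layers a brand is covered in and which are missing.

    brand_mentions: iterable of (source_domain, layer) pairs where the
    brand was mentioned. Labels outside LAYERS are ignored.
    """
    seen = {layer for _, layer in brand_mentions if layer in LAYERS}
    return {
        "covered": [layer for layer in LAYERS if layer in seen],
        "missing": [layer for layer in LAYERS if layer not in seen],
    }

# Hypothetical example: a brand mentioned only on one review site.
audit = audit_layers([("reviews.example", "evaluation")])
print(audit)
```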

The fastest path to AI visibility isn’t convincing models to trust you; it’s appearing inside the domains they already trust.

Based on the citation patterns:

Your strategy should focus on inclusion, accuracy, and consistency across these domains, not just mentions.

AI models don’t reuse marketing language. They reuse clear explanations.

That means:

The easier your product is to explain, the easier it is for AI to cite.

As the data shows, ChatGPT, Gemini, Claude, and Perplexity value different signals.

Instead of spreading effort evenly:

Winning one model well is more valuable than underperforming everywhere.

AI trust isn’t static. Domains gain and lose influence, categories shift, and models evolve.

That means AI visibility needs:

Which leads directly to the final piece of the puzzle: measurement.
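As a rough illustration of what that measurement could look like, the sketch below compares each domain’s citation share between two time windows. It assumes two flat lists of cited domains, one per period; `share_shift` and the sample data are hypothetical and do not represent any particular tool.

```python
from collections import Counter

def _shares(cited_domains):
    """Citation share per domain for one period."""
    counts = Counter(cited_domains)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {domain: n / total for domain, n in counts.items()}

def share_shift(before, after):
    """Return {domain: change in share}, later period minus earlier period."""
    b, a = _shares(before), _shares(after)
    return {d: a.get(d, 0.0) - b.get(d, 0.0) for d in sorted(set(a) | set(b))}

# Hypothetical toy sample (placeholder domains, not data from the study).
earlier = ["a.example", "a.example", "b.example", "b.example"]
later = ["a.example", "b.example", "b.example", "b.example"]
shift = share_shift(earlier, later)
```

Positive values mark domains gaining share between the two periods; negative values mark domains losing it.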

Up next, we’ll cover how to track and operationalize AI visibility using Goodie, and how teams can turn insights like these into a repeatable advantage.

Understanding where AI trust lives is only useful if you can monitor, measure, and act on it. AI visibility isn’t static: domains gain influence, models shift preferences, and categories evolve. That’s why wearable brands need ongoing visibility into how and where they appear in AI answers.

With Goodie, teams can:

AI search doesn’t replace traditional analytics; it adds a new layer. Goodie makes that layer measurable.

This study makes one thing clear: the future of wearable tech discovery is being shaped by AI systems, not search rankings. Across 37,800 citations, trust consistently concentrates around a small network of domains that explain, validate, and contextualize wearable technology, not the brands building it.

The implication is stark but actionable:

The next generation of wearable leaders won’t just ship better devices. They’ll understand how AI models learn, reason, and decide who to trust, and they’ll position themselves accordingly.

If AI is where decisions are being shaped, then AI visibility is no longer optional. The only question is whether you’re observing the shift or actively shaping it.