Marketing attribution has always been about connecting effort to outcome. But as AI systems increasingly shape how people discover, evaluate, and choose brands, that connection is getting harder to see.

Today, buyers don’t just click through results: they ask questions, get synthesized answers, and form opinions inside AI experiences. Those moments rarely show up in analytics, yet they still influence demand, conversions, and revenue.

This article explores how attribution needs to evolve for that reality: expanding beyond clicks and conversions to account for brand visibility, influence, and impact inside AI search (and how marketers can start measuring what actually shapes decisions).

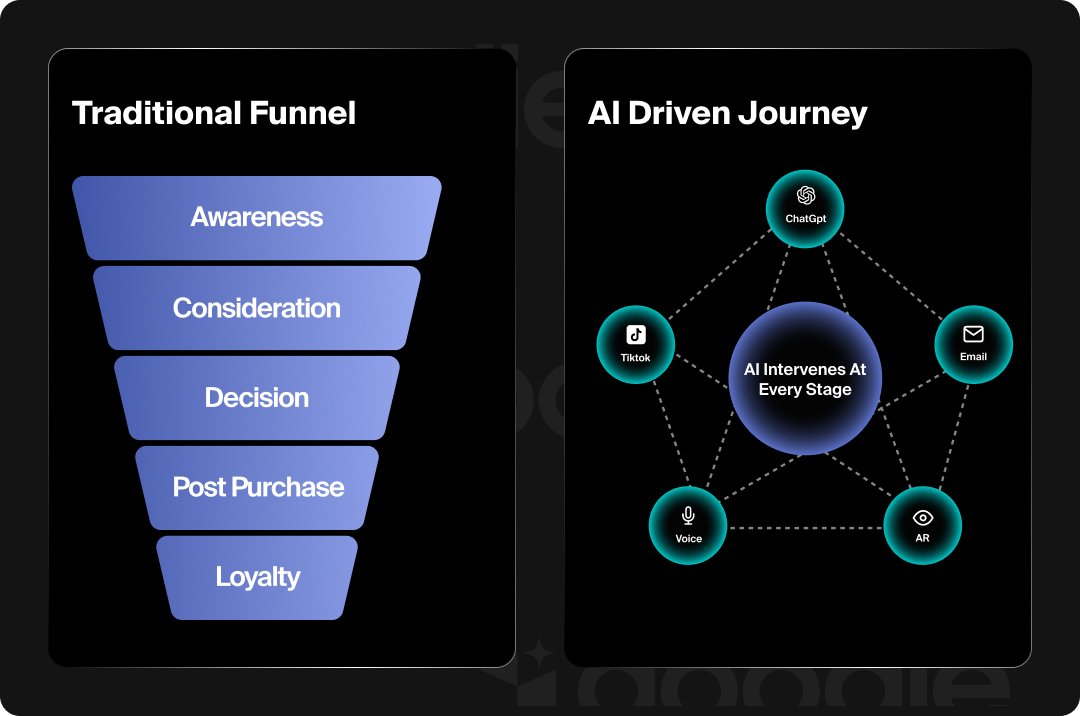

Marketing attribution has traditionally been about assigning credit to marketing touchpoints that lead to a conversion (a click, a form fill, a purchase). That model assumes a fairly linear customer journey: a user sees a campaign, clicks through, and converts. But with AI in the picture now, that journey isn’t so linear.

In AI search and discovery environments (LLMs, AI Overviews, voice assistants, and recommendation engines), brands influence decisions without necessarily generating a visit. Users get answers, recommendations, comparisons, and reassurance directly inside AI interfaces. By the time they search your brand, visit your site, or talk to your sales team, the decision has often already been shaped.

In this context, marketing attribution needs to expand beyond conversion credit to include visibility and influence that happen before, without, or outside the click.

Attribution in AI search is less about pinpointing a single moment of conversion, and more about understanding how demand is formed. That includes:

In short, attribution must account for pre-click influence, not just post-click behavior.

AI search isn’t just another marketing channel to tag or track. Instead, it acts as an intermediary layer between brands and buyers, synthesizing information from across the web and presenting it as a single, authoritative response.

That means:

If attribution only measures what happens after a session begins, it ignores a growing portion of how modern buyers research, compare, and decide.

In the context of AI search, marketing attribution is the practice of measuring how brand visibility, topic presence, and sentiment across AI-mediated discovery environments influence downstream demand, conversions, and revenue.

This doesn’t replace existing attribution models; rather, it extends them. Clicks, sessions, and conversions still matter, but they’re no longer the full story. Attribution frameworks must now connect impressions → influence → outcomes, even when those impressions happen in places that traditional analytics can’t see.

This shift sets the foundation for how marketers should rethink attribution models, dashboards, and success metrics in an AI-shaped discovery landscape, which is exactly what we’ll explore next.

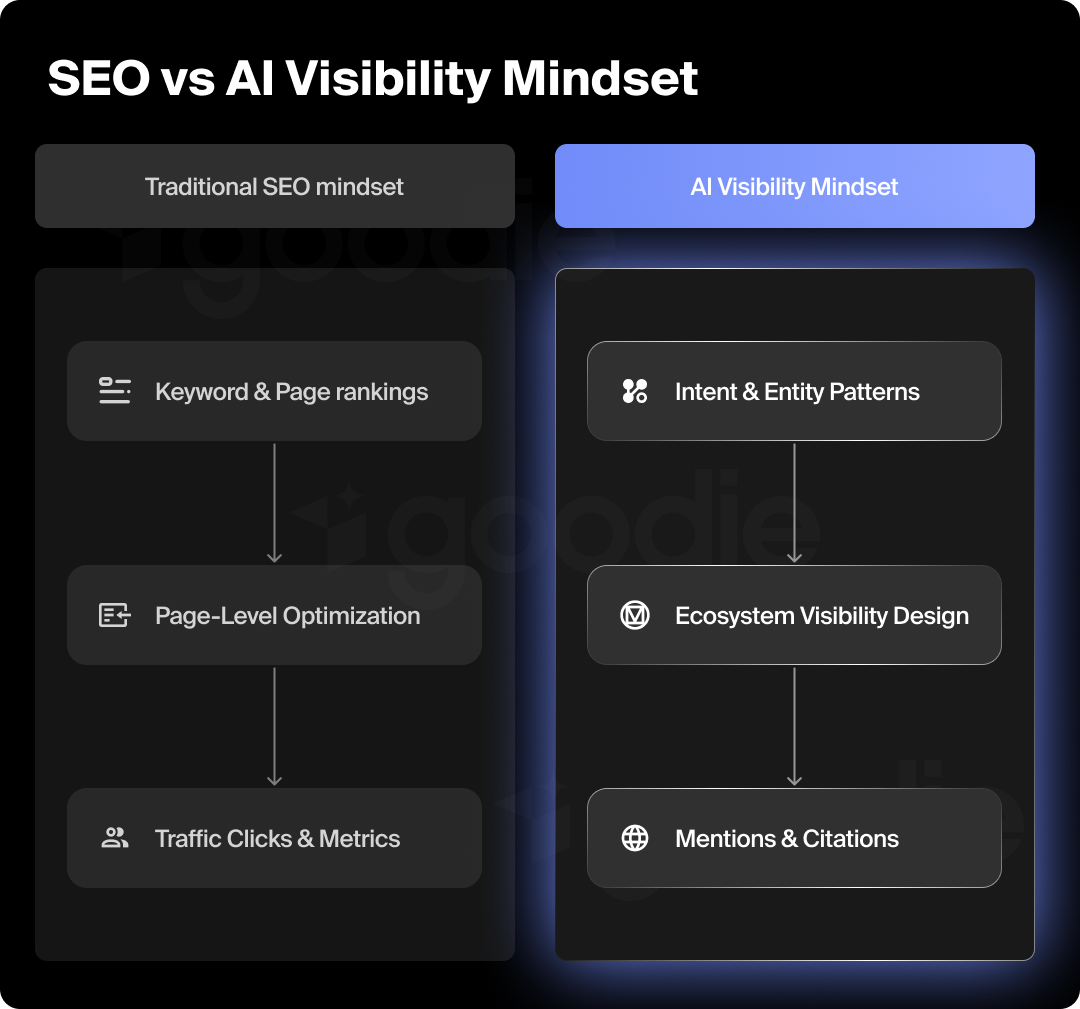

To adapt attribution for AI-driven discovery, it’s important to understand why existing models struggle in this environment. It’s not that our attribution frameworks were built incorrectly; they were built for a different internet.

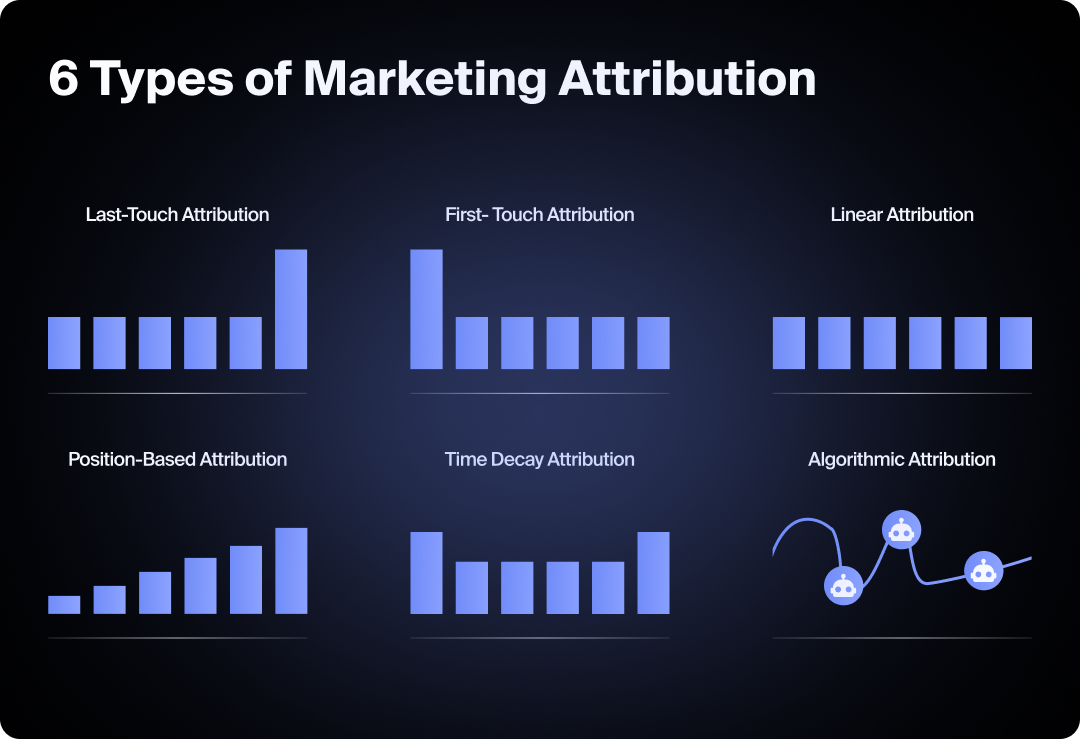

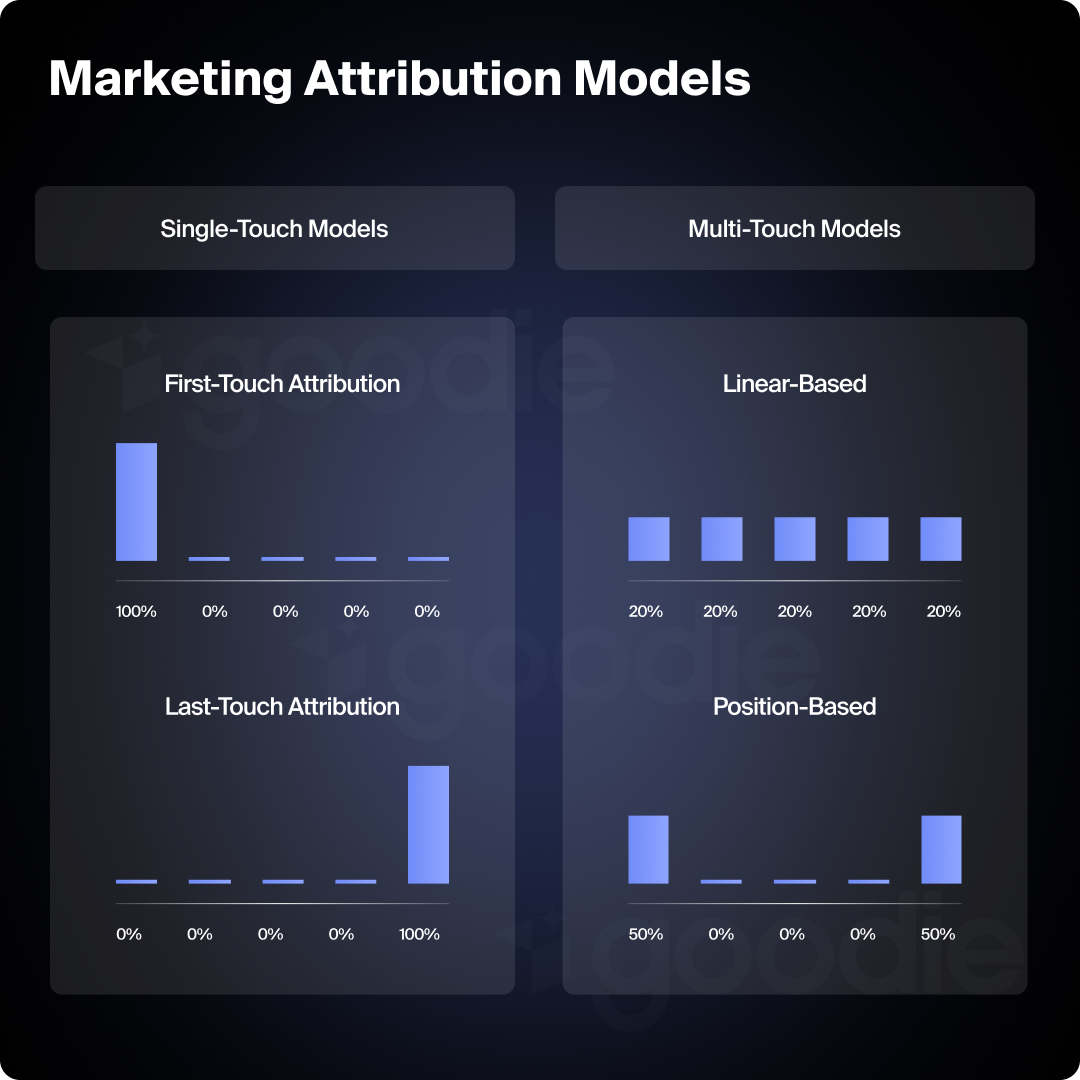

Classic attribution models (first-touch, last-touch, linear, even multi-touch) depend on one core signal: the click.

AI search often removes that step entirely.

When users:

…the influence happens without a measurable interaction. No session is created, no UTM fires, and no touchpoint receives credit, despite the brand playing a meaningful role in the decision.

Traditional attribution assumes influence begins once a user enters a measurable environment (site visit, ad click, form fill). AI shifts influence upstream, into places most analytics stacks weren’t designed to observe.

That includes:

By the time a user converts, attribution tools often capture the outcome, but not the influence that led them there.

Even sophisticated multi-touch attribution models struggle when inputs are incomplete.

AI discovery introduces:

These touchpoints don’t register cleanly in analytics, if they register at all. As a result, attribution skews toward the last measurable action instead of the most impactful one.

When attribution ignores AI influence, marketers tend to:

This creates a distorted view of performance, one that optimizes for what’s easiest to measure, not what actually drives growth.

If attribution models are failing in AI search, it’s not because the math is wrong; it’s because the inputs are incomplete.

AI discovery introduces new forms of influence that don’t show up as clicks, sessions, or conversions, but still shape demand in very real ways. To adapt attribution for AI search, marketers need to expand what counts as a meaningful signal.

This is where attribution starts to shift from tracking interactions to measuring exposure and influence.

In AI search environments, visibility means whether your brand appears at all, and how often.

Key questions attribution needs to answer:

This type of visibility functions like a new kind of impression layer. Even without a click, repeated exposure inside AI answers can:

Ignoring these impressions means ignoring the top of a rapidly growing funnel.
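To make this impression layer concrete, here is a minimal Python sketch of two common visibility metrics. It assumes you already collect a sample of AI answers for a fixed set of tracked prompts and can detect which brands each answer mentions; the record format, the brand names, and the functions `visibility_rate` and `share_of_voice` are all hypothetical.

```python
from collections import Counter

# Hypothetical sample: one record per AI answer to a tracked prompt,
# listing the brands that answer mentioned. How you collect this is up to you.
responses = [
    {"prompt": "best crm for startups", "brands": ["Acme", "Globex"]},
    {"prompt": "crm with built-in ai", "brands": ["Globex"]},
    {"prompt": "affordable crm", "brands": ["Acme", "Initech", "Globex"]},
    {"prompt": "crm for agencies", "brands": []},
]


def visibility_rate(responses, brand):
    """Fraction of sampled answers that mention `brand` at least once."""
    if not responses:
        return 0.0
    hits = sum(1 for r in responses if brand in r["brands"])
    return hits / len(responses)


def share_of_voice(responses):
    """Each brand's fraction of all brand mentions (one per brand per answer)."""
    counts = Counter(b for r in responses for b in set(r["brands"]))
    total = sum(counts.values())
    return {b: n / total for b, n in counts.items()} if total else {}


print(visibility_rate(responses, "Acme"))  # 2 of 4 answers -> 0.5
print(share_of_voice(responses)["Globex"])  # 3 of 6 mentions -> 0.5
```

Tracked over time, these two numbers behave like an impression count: they say nothing about clicks, but they show whether the brand is part of the answers buyers actually see.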

AI systems don’t just mention brands; they associate them with topics, categories, and use cases.

Attribution needs to capture:

This matters because AI recommendations are rarely neutral. Being mentioned as an example, as a leader, or as an alternative carries very different levels of influence.

From an attribution perspective, topic visibility helps explain why certain channels or campaigns convert better later, even when the immediate touchpoints don’t show a clear cause.

Not all mentions are equal.

AI answers often frame brands in specific ways:

Attribution systems that only track presence miss this entirely. But sentiment and framing directly affect:

When a buyer converts after exposure to AI content, sentiment often explains why they chose one brand over another, even if that influence never produced a measurable interaction.
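Presence, framing, and sentiment can be rolled into a single influence score. The sketch below is one simple option, assuming each mention has already been labeled with a framing role and a sentiment value between -1 and 1 (by a classifier or by hand). The `FRAMING_WEIGHTS` values and the `influence_score` function are hypothetical placeholders, not benchmarks.

```python
# Hypothetical framing weights: how strongly each kind of mention is assumed
# to move consideration. These are placeholders to calibrate, not benchmarks.
FRAMING_WEIGHTS = {"leader": 1.0, "example": 0.6, "alternative": 0.4}


def influence_score(mentions):
    """Mean of framing weight x sentiment across one brand's mentions.

    Each mention is a dict with a "framing" key (one of FRAMING_WEIGHTS)
    and a "sentiment" value in [-1.0, 1.0]. Returns 0.0 for no mentions.
    """
    if not mentions:
        return 0.0
    total = sum(FRAMING_WEIGHTS[m["framing"]] * m["sentiment"] for m in mentions)
    return total / len(mentions)


# Two hypothetical brands, each mentioned twice in sampled AI answers.
brand_a = [
    {"framing": "leader", "sentiment": 0.8},
    {"framing": "example", "sentiment": 0.5},
]
brand_b = [
    {"framing": "alternative", "sentiment": 0.8},
    {"framing": "alternative", "sentiment": -0.5},
]

print(f"A: {influence_score(brand_a):.2f}, B: {influence_score(brand_b):.2f}")
```

Both brands have the same mention count, yet brand A scores about 0.55 and brand B about 0.06. A presence-only metric would treat them as equal.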

AI visibility itself may not be clickable, but its impact still shows up elsewhere, just not in obvious ways.

Common downstream signals include:

These signals don’t prove causation on their own, but together, they form patterns of influence that attribution models can learn from and weight appropriately.

Once AI visibility signals are included, attribution stops being a backward-looking exercise and becomes a diagnostic system:

This doesn’t replace existing attribution models; it extends them upstream, allowing marketers to connect AI-mediated influence to outcomes they already track.

Once AI visibility signals are added to the picture, a hard truth emerges: most attribution models weren’t designed to handle influence without interaction. That doesn’t mean they’re obsolete, but it does mean they need to evolve.

Now, if panic is starting to set in, rest assured that the goal here isn’t to invent a brand-new model for AI search. Instead, we need to expand existing attribution frameworks so they can account for probability, influence, and upstream exposure.

That’s a subtle but important shift.

Instead of assigning 100% of the value to a final click or form fill, evolving attribution models:

AI visibility becomes an input to influence, not a competitor to conversion metrics.
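As a rough illustration of spreading credit instead of handing all of it to the last click, here is a weighted fractional model in Python in which a modeled AI-exposure touchpoint sits at the start of the journey. The touchpoint names, the weights, and the `allocate_credit` function are hypothetical. In practice the AI-exposure step would have to be inferred (for example, from visibility on the topics an account researched) rather than observed.

```python
# Hypothetical touchpoint weights. "ai_exposure" is a modeled touchpoint,
# inferred rather than observed; every value here is an illustrative assumption.
TOUCHPOINT_WEIGHTS = {
    "ai_exposure": 0.5,
    "organic_visit": 1.0,
    "paid_click": 1.0,
    "demo_request": 1.5,
}


def allocate_credit(path, conversion_value):
    """Split conversion_value across a journey in proportion to touchpoint weights.

    Touchpoint types that appear more than once accumulate their shares.
    """
    if not path:
        return {}
    weights = [TOUCHPOINT_WEIGHTS[t] for t in path]
    total = sum(weights)
    credit = {}
    for touchpoint, weight in zip(path, weights):
        share = conversion_value * weight / total
        credit[touchpoint] = credit.get(touchpoint, 0.0) + share
    return credit


journey = ["ai_exposure", "organic_visit", "demo_request"]
print(allocate_credit(journey, 9000))
```

Here a 9,000 conversion splits into 1,500, 3,000, and 4,500. The AI step receives a bounded, explicit share instead of either nothing or everything, and its weight becomes a modeling decision the team can debate openly.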

In AI search, certainty is unrealistic (and it will probably stay that way for a long time).

You often can’t say: “This AI answer caused this conversion.”

But you can say:

Modern attribution models need to:

This makes attribution less binary and far more honest.

Most attribution models start once a user enters a measurable environment. AI influence often happens before that point.

To evolve, attribution needs to:

When attribution only begins at the point of measurable interaction, it captures outcomes (not the forces that shaped them).

AI influence doesn’t map neatly to a single attribution model.

Modern frameworks increasingly:

Attribution becomes a system of interpretation rather than a rigid formula.

What changes:

What doesn’t:

Once attribution models can incorporate AI influence, the next challenge becomes operational: How do you turn impressions and influence into something marketers can actually use?

That’s where the framework comes in.

With AI influence in scope, the question shifts from “what should we measure?” to “how do we structure this in a way that’s usable?”

An Impression → Influence → Revenue framework helps here, not as a rigid model but as a way to organize signals that already exist and connect them to outcomes marketers care about.

The first layer captures whether and where your brand is visible in AI-mediated discovery environments.

This is about exposure, not just rankings or clicks.

Impression-level signals include:

These impressions function like a new top-of-funnel layer. They don’t convert on their own, but they shape familiarity, recall, and trust long before a user takes an action you can track.

Impressions alone don’t tell the full story. Influence explains how that visibility actually affects consideration.

This layer focuses on context rather than volume.

Influence signals often include:

Influence is where attribution starts to move from counting appearances to interpreting impact. Two brands may appear equally often in AI responses, but the way they’re framed can lead to very different downstream outcomes.

The final layer anchors everything back to what ultimately matters: revenue and growth.

AI influence rarely maps cleanly to last-touch conversions, but its impact shows up through patterns such as:

Rather than forcing AI influence to “own” revenue, this layer looks at correlation, lift, and contribution.
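Lift can be estimated by comparing conversion rates between a cohort you believe was more exposed to AI answers and a comparison cohort. This Python sketch assumes you can define those cohorts somehow (for example, markets or topics with high versus low AI visibility); that choice is the hard part and is not solved here. The helpers `relative_lift` and `two_proportion_z` are hypothetical names; the second computes a standard pooled two-proportion z-score so a difference can be judged against noise.

```python
import math


def relative_lift(exposed_conv, exposed_n, control_conv, control_n):
    """Relative lift in conversion rate of the exposed cohort over the comparison cohort."""
    p_exposed = exposed_conv / exposed_n
    p_control = control_conv / control_n
    if p_control == 0:
        raise ValueError("comparison cohort has no conversions; lift is undefined")
    return (p_exposed - p_control) / p_control


def two_proportion_z(exposed_conv, exposed_n, control_conv, control_n):
    """Pooled two-proportion z-score for the difference in conversion rates."""
    p_exposed = exposed_conv / exposed_n
    p_control = control_conv / control_n
    pooled = (exposed_conv + control_conv) / (exposed_n + control_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / exposed_n + 1 / control_n))
    if se == 0:
        raise ValueError("no variation in outcomes; z-score is undefined")
    return (p_exposed - p_control) / se


# Hypothetical cohorts: 60 of 1,000 vs. 40 of 1,000 visitors converted.
print(f"lift: {relative_lift(60, 1000, 40, 1000):.0%}")
print(f"z:    {two_proportion_z(60, 1000, 40, 1000):.2f}")
```

A z-score above roughly 1.96 in absolute value corresponds to the conventional 5% two-sided threshold. Even then, cohorts defined by AI exposure are rarely randomized, so the result is evidence of contribution, not proof of it.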

What makes this structure useful is that it mirrors how buyers actually move:

By separating impressions, influence, and revenue into distinct layers, marketers can:

You don’t have to replace your existing attribution setup; you’re just giving it a missing dimension that reflects how AI now participates in discovery.

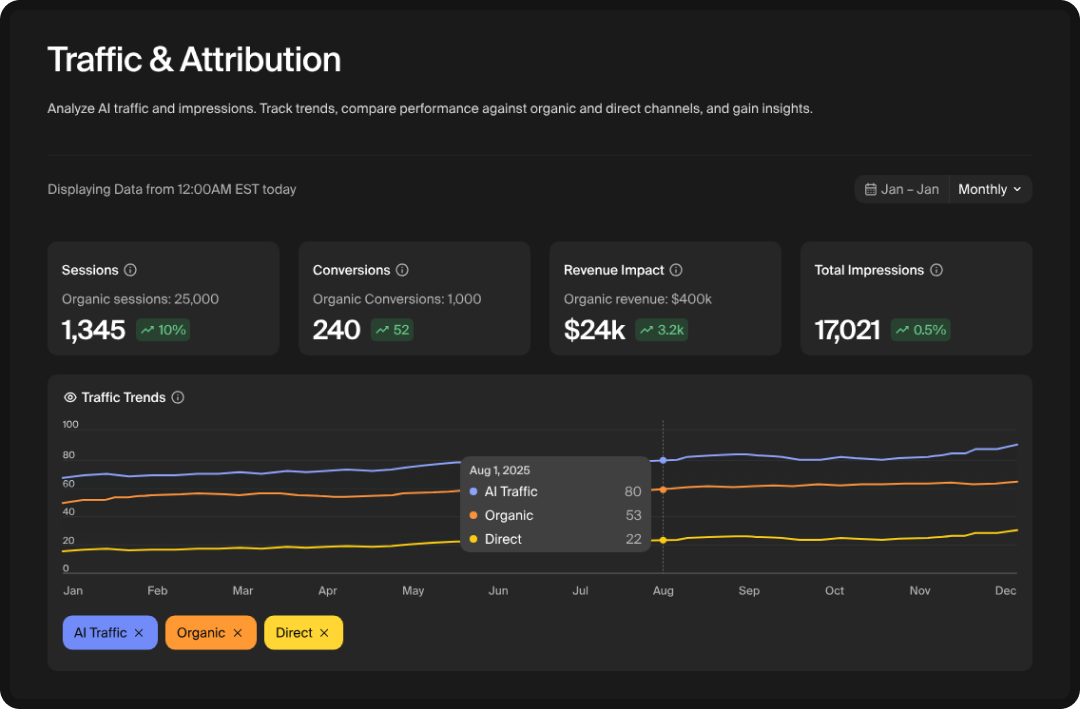

Once this framework is in place, the next step is operational: how these layers come together inside a dashboard that marketers can actually use.

That means combining AI visibility data, analytics, and CRM signals into a single view, without turning attribution into an unreadable science project.
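A minimal Python sketch of that single view, assuming three weekly exports keyed by zero-padded ISO week labels: one from an AI visibility tool, one from web analytics, and one from the CRM. The field names, the `WeeklyRow` structure, and `build_unified_view` are hypothetical. Columns run impression, influence, revenue, mirroring the Impression → Influence → Revenue framework.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WeeklyRow:
    week: str                            # zero-padded ISO week, e.g. "2025-W07"
    ai_visibility_rate: Optional[float]  # impression layer
    ai_sentiment: Optional[float]        # influence layer
    branded_sessions: Optional[int]      # revenue layer: web analytics
    pipeline_value: Optional[float]      # revenue layer: CRM


def build_unified_view(visibility, analytics, crm):
    """Join three {week: {field: value}} exports into one row per week.

    Weeks missing from a source keep None instead of 0, so gaps in
    coverage are not mistaken for zero performance.
    """
    # Lexicographic sort is chronological only for zero-padded ISO week labels.
    weeks = sorted(set(visibility) | set(analytics) | set(crm))
    rows = []
    for week in weeks:
        vis = visibility.get(week, {})
        rows.append(
            WeeklyRow(
                week=week,
                ai_visibility_rate=vis.get("visibility_rate"),
                ai_sentiment=vis.get("sentiment"),
                branded_sessions=analytics.get(week, {}).get("branded_sessions"),
                pipeline_value=crm.get(week, {}).get("pipeline_value"),
            )
        )
    return rows


rows = build_unified_view(
    visibility={"2025-W01": {"visibility_rate": 0.30, "sentiment": 0.4}},
    analytics={"2025-W01": {"branded_sessions": 120}, "2025-W02": {"branded_sessions": 150}},
    crm={"2025-W02": {"pipeline_value": 50_000.0}},
)
for row in rows:
    print(row)
```

Keeping missing values as None is a deliberate choice: a dashboard that silently fills gaps with zeros would make an unmeasured week look like a bad one.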

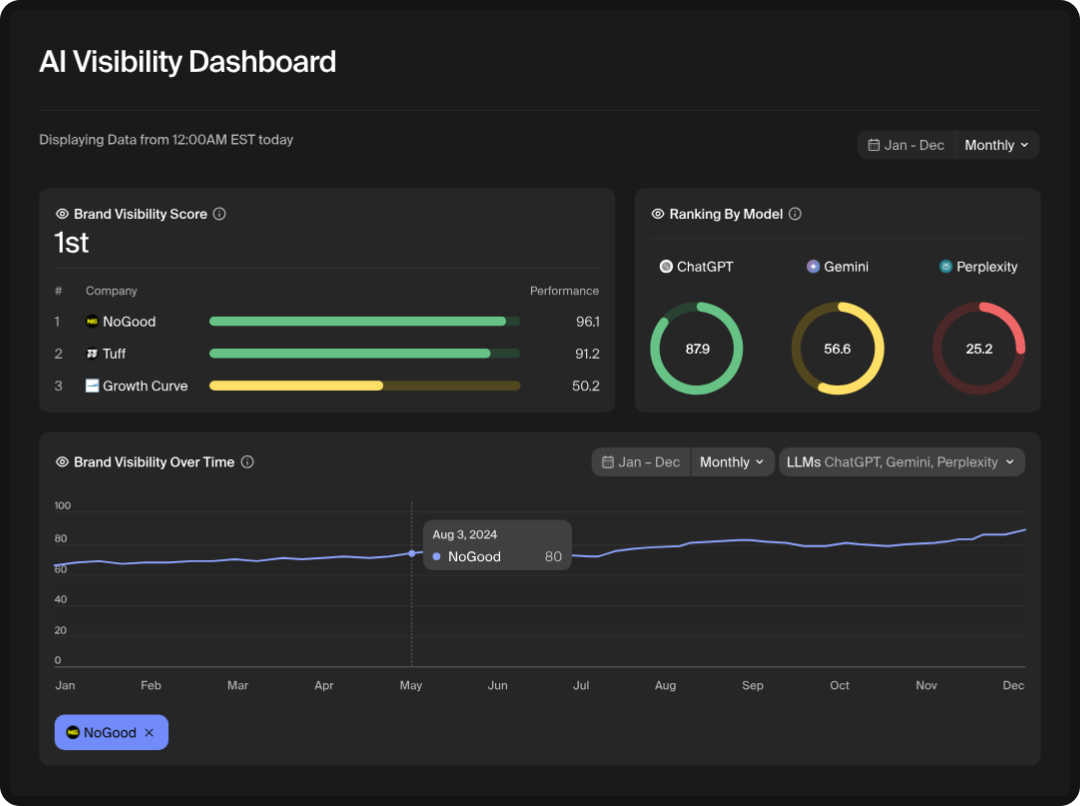

Once impressions, influence, and revenue are treated as connected layers, attribution stops living in separate tools and starts functioning as a single system. A unified AI attribution dashboard brings both new and existing signals into one coherent view.

Most dashboards default to revenue and work backward. An AI-aware attribution dashboard flips that order.

At the top, marketers should be able to see:

This layer answers a simple but critical question: are we even part of the conversation AI systems are shaping?

Without that context, downstream performance is easy to misread.

The next layer interprets visibility rather than just counting it.

Here, dashboards start to show:

This is where marketers begin to understand why certain campaigns, channels, or content strategies perform better later, even when the attribution trail isn’t obvious. Influence metrics turn exposure into insight.

The final layer connects everything back to the outcomes leadership teams care about.

Instead of forcing AI visibility to “own” conversions, a unified dashboard looks for:

This approach keeps attribution honest. AI influence is modeled, not overstated, but it’s no longer invisible.

A strong AI attribution dashboard doesn’t try to answer every question at once. It’s built for different users:

When impressions, influence, and revenue live in the same system, teams stop arguing about attribution models and start aligning around strategy.

AI systems are already shaping discovery, preference, and demand. The brands that adapt fastest won’t be the ones with the most complex models; they’ll be the ones with the clearest picture of influence.

A unified attribution dashboard makes that possible, without pretending the journey is perfectly trackable.

As marketing attribution expands to include AI-driven visibility and influence, expectations matter. While these signals unlock a clearer view of how demand is shaped, they don’t magically make attribution perfect, and pretending they do would undermine trust.

The value of AI-aware attribution isn’t certainty. It’s clarity where there used to be blind spots.

AI-aware attribution frameworks are especially strong at revealing patterns that traditional models miss.

They can:

This makes attribution more strategic. Instead of reacting to last-touch performance, marketers can proactively shape demand upstream.

There are also hard limits (and they’re important to acknowledge).

AI attribution cannot:

Influence in AI search is often diffuse, cumulative, and indirect. Any system that claims otherwise is oversimplifying reality.

Traditional attribution often feels precise because it’s narrow. AI-aware attribution feels messier because it’s more representative of how decisions are actually made.

The tradeoff looks like this:

For most marketing teams, that’s a worthwhile exchange.

Ignoring AI influence makes attribution incomplete.

As AI systems continue to mediate discovery, comparison, and validation, attribution frameworks that exclude these signals will increasingly over-credit the final step and push teams to underinvest in what created demand in the first place.

AI-aware attribution doesn’t replace rigor, but it does re-apply it to a more realistic version of the buyer journey.

Marketing attribution has always been about understanding what worked. In an AI-shaped discovery landscape, it’s increasingly about understanding what mattered, even when that influence never showed up as a click.

AI systems now sit between brands and buyers. They summarize, recommend, compare, and validate long before a user visits a website or speaks to sales. When attribution frameworks ignore that layer, they miss data and misinterpret performance. Channels look stronger than they are, brand-building looks weaker than it is, and demand creation gets confused with demand capture.

That’s why modern attribution needs to expand. Measuring AI visibility, topic presence, and sentiment alongside traditional performance metrics gives marketers a more complete picture of how decisions are actually shaped. Not perfect certainty, but better context, better judgment, and better strategy.

This is the gap platforms like Goodie are designed to fill. By making AI visibility and influence measurable and connectable to downstream outcomes, Goodie helps teams understand how AI search participates in their growth, not just whether a campaign converted.

Marketing attribution in AI search focuses on measuring how brand visibility, topic presence, and sentiment inside AI answers influence downstream demand, not just which click led to a conversion. Because AI systems often shape decisions without sending traffic, attribution must account for pre-click and no-click influence that shows up later as branded search, direct traffic, pipeline, or revenue.

Traditional channels create measurable interactions (impressions, clicks, sessions). AI search often creates exposure without interaction. A brand can be recommended, compared, or validated inside an AI response without generating a visit, which means classic attribution models miss that influence unless visibility and influence signals are explicitly included.

Yes. AI-generated answers frequently act as decision-shaping moments. They reduce research time, narrow consideration sets, and validate choices. While this influence doesn’t show up as “AI traffic,” its impact often appears indirectly through increases in branded search, higher conversion rates on later visits, faster pipeline movement, or improved close rates.

AI influence is measured probabilistically, not deterministically. Instead of tying one answer to one conversion, marketers look for patterns over time: correlations between AI visibility, sentiment, topic ownership, and downstream performance. The goal isn’t certainty; it’s understanding contribution and directional impact.

Some icons and visual assets used in this post, as well as in previously published and future blog posts, are licensed under the MIT License, Apache License 2.0, and Creative Commons Attribution 4.0.

https://opensource.org/licenses/MIT